## CV / Object Detection

2207.10758 DEVIANT: Depth EquiVarIAnt NeTwork for Monocular 3D Object Detection

Arxiv Link: http://arxiv.org/abs/2207.10758

Github Link: https://github.com/abhi1kumar/deviant

Authors: Abhinav Kumar, Garrick Brazil, Enrique Corona, Armin Parchami, Xiaoming Liu

Tag: object detection, neural network

2207.10774 Focused Decoding Enables 3D Anatomical Detection by Transformers

Arxiv Link: http://arxiv.org/abs/2207.10774

Github Link: https://github.com/bwittmann/transoar

Authors: Bastian Wittmann, Fernando Navarro, Suprosanna Shit, Bjoern Menze

Tag: object detection, attention mechanism, vision transformer, transformer encoder, encoder-decoder architecture

2207.10936 Long-tailed Instance Segmentation using Gumbel Optimized Loss

Arxiv Link: http://arxiv.org/abs/2207.10936

Github Link: https://github.com/kostas1515/gol

Authors: Konstantinos Panagiotis Alexandridis, Jiankang Deng, Anh Nguyen, Shan Luo

Tag: instance segmentation, object detection

2207.11031 MobileDenseNet: A new approach to object detection on mobile devices

Arxiv Link: http://arxiv.org/abs/2207.11031

Github Link: https://github.com/hajizadeh/mobiledensenet

Authors: Mohammad Hajizadeh, Mohammad Sabokrou, Adel Rahmani

Tag: real-time, pascal voc, source code, object detection

2207.11103 DeVIS: Making Deformable Transformers Work for Video Instance Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11103

Github Link: https://github.com/acaelles97/devis

Authors: Adrià Caelles, Tim Meinhardt, Guillem Brasó, Laura Leal-Taixé

Tag: video instance segmentation, video sequence, feature map, object detection, instance segmentation, segmentation task

2207.11184 Multi-Faceted Distillation of Base-Novel Commonality for Few-shot Object Detection

Arxiv Link: http://arxiv.org/abs/2207.11184

Github Link: https://github.com/wushuang1998/mfdc

Authors: Shuang Wu, Wenjie Pei, Dianwen Mei, Fanglin Chen, Jiandong Tian

Tag: memory bank, object detection, object detector

2207.08531 DID-M3D: Decoupling Instance Depth for Monocular 3D Object Detection

Arxiv Link: http://arxiv.org/abs/2207.08531

Github Link: https://github.com/spengliang/did-m3d

Authors: Liang Peng, Xiaopei Wu, Zheng Yang, Haifeng Liu, Deng Cai

Tag: depth estimation, object detection, data augmentation, ablation study

2207.11368 Neural-Sim: Learning to Generate Training Data with NeRF

Arxiv Link: http://arxiv.org/abs/2207.11368

Github Link: https://github.com/gyhandy/neural-sim-nerf

Authors: Yunhao Ge, Harkirat Behl, Jiashu Xu, Suriya Gunasekar, Neel Joshi

Tag: computer vision model, computer vision, detection task, object detection, vision model, application scenario, synthetic data

2207.11455 UC-OWOD: Unknown-Classified Open World Object Detection

Arxiv Link: http://arxiv.org/abs/2207.11455

Github Link: https://github.com/johnwuzh/uc-owod

Authors: Zhiheng Wu, Yue Lu, Xingyu Chen, Zhengxing Wu, Liwen Kang

Tag: object detection, computer vision, object detector

2207.11753 Label-Guided Auxiliary Training Improves 3D Object Detector

Arxiv Link: http://arxiv.org/abs/2207.11753

Github Link: https://github.com/fabiencode/lg3d

Authors: Yaomin Huang, Xinmei Liu, Yichen Zhu, Zhiyuan Xu, Chaomin Shen

Tag: object detection, point cloud, object detector, bounding box

2207.12654 ProposalContrast: Unsupervised Pre-training for LiDAR-based 3D Object Detection

Arxiv Link: http://arxiv.org/abs/2207.12654

Github Link: https://github.com/yinjunbo/proposalcontrast

Authors: Junbo Yin, Dingfu Zhou, Liangjun Zhang, Jin Fang, Cheng-Zhong Xu

Tag: object detection, point cloud, receptive field

2207.12655 Semi-supervised 3D Object Detection with Proficient Teachers

Arxiv Link: http://arxiv.org/abs/2207.12655

Github Link: https://github.com/yinjunbo/proficientteachers

Authors: Junbo Yin, Jin Fang, Dingfu Zhou, Liangjun Zhang, Cheng-Zhong Xu

Tag: teacher model, object detection, contrastive learning, supervised learning, point cloud, pseudo label, autonomous driving, object detector

2207.12716 MV-FCOS3D++: Multi-View Camera-Only 4D Object Detection with Pretrained Monocular Backbones

Arxiv Link: http://arxiv.org/abs/2207.12716

Github Link: https://github.com/tai-wang/depth-from-motion

Authors: Tai Wang, Qing Lian, Chenming Zhu, Xinge Zhu, Wenwei Zhang

Tag: technical report, object detection

2207.12988 Monocular 3D Object Detection with Depth from Motion

Arxiv Link: http://arxiv.org/abs/2207.12988

Github Link: https://github.com/tai-wang/depth-from-motion

Authors: Tai Wang, Jiangmiao Pang, Dahua Lin

Tag: depth estimation, object detection, camera pose

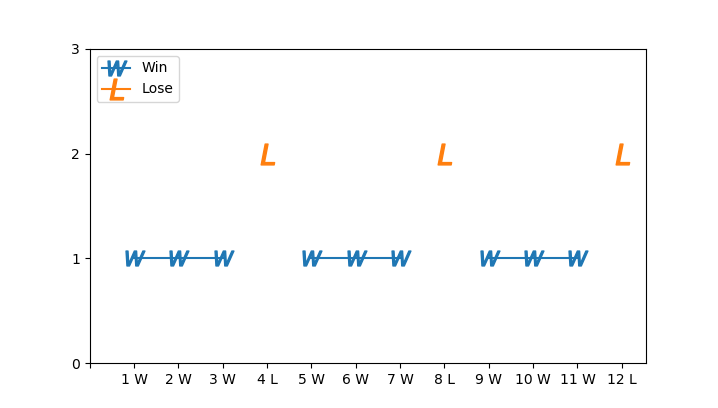

2207.13339 ALBench: A Framework for Evaluating Active Learning in Object Detection

Arxiv Link: http://arxiv.org/abs/2207.13339

Github Link: https://github.com/industryessentials/ymir

Authors: Zhanpeng Feng, Shiliang Zhang, Rinyoichi Takezoe, Wenze Hu, Manmohan Chandraker

Tag: neural architecture search, active learning, neural network architecture, neural network, object detection, image classification, network architecture, neural architecture

2207.13362 Camouflaged Object Detection via Context-aware Cross-level Fusion

Arxiv Link: http://arxiv.org/abs/2207.13362

Github Link: https://github.com/ben57882/c2fnet-tscvt

Authors: Geng Chen, Si-Jie Liu, Yu-Jia Sun, Ge-Peng Ji, Ya-Feng Wu

Tag: benchmark dataset, object detection, feature representation

2207.14172 Semantic-Aligned Matching for Enhanced DETR Convergence and Multi-Scale Feature Fusion

Arxiv Link: http://arxiv.org/abs/2207.14172

Github Link: https://github.com/zhanggongjie/sam-detr

Authors: Gongjie Zhang, Zhipeng Luo, Yingchen Yu, Jiaxing Huang, Kaiwen Cui

Tag: object detection, detection performance, feature fusion, detection task

2207.14221 Humans disagree with the IoU for measuring object detector localization error

Arxiv Link: http://arxiv.org/abs/2207.14221

Github Link: https://github.com/ombretta/humans_vs_iou

Authors: Ombretta Strafforello, Vanathi Rajasekart, Osman S. Kayhan, Oana Inel, Jan van Gemert

Tag: object detector

2207.14284 HorNet: Efficient High-Order Spatial Interactions with Recursive Gated Convolution

Arxiv Link: http://arxiv.org/abs/2207.14284

Github Link: https://github.com/raoyongming/hornet

Authors: Yongming Rao, Wenliang Zhao, Yansong Tang, Jie Zhou, Ser-Nam Lim

Tag: self-attention, object detection, swin transformer, imagenet classification, semantic segmentation, vision transformer

## CV / 3D

2207.10762 MeshLoc: Mesh-Based Visual Localization

Arxiv Link: http://arxiv.org/abs/2207.10762

Github Link: https://github.com/tsattler/meshloc_release

Authors: Vojtech Panek, Zuzana Kukelova, Torsten Sattler

Tag: pose estimation, feature matching, autonomous robot, point cloud, camera pose, 3d model

2207.11554 Towards Open Set 3D Learning: A Benchmark on Object Point Clouds

Arxiv Link: http://arxiv.org/abs/2207.11554

Github Link: https://github.com/antoalli/3d_os

Authors: Antonio Alliegro, Francesco Cappio Borlino, Tatiana Tommasi

Tag: autonomous system, point cloud, safety-critical application, 3d model

2207.11790 PatchRD: Detail-Preserving Shape Completion by Learning Patch Retrieval and Deformation

Arxiv Link: http://arxiv.org/abs/2207.11790

Github Link: https://github.com/gitbosun/patchrd

Authors: Bo Sun, Vladimir G. Kim, Noam Aigerman, Qixing Huang, Siddhartha Chaudhuri

Tag: generative method, 3d shape, neural network

2207.12485 3D Shape Sequence of Human Comparison and Classification using Current and Varifolds

Arxiv Link: http://arxiv.org/abs/2207.12485

Github Link: https://github.com/cristal-3dsam/humancomparisonvarifolds

Authors: Emery Pierson, Mohamed Daoudi, Sylvain Arguillere

Tag: 3d shape

## CV / Point Cloud

2207.10762 MeshLoc: Mesh-Based Visual Localization

Arxiv Link: http://arxiv.org/abs/2207.10762

Github Link: https://github.com/tsattler/meshloc_release

Authors: Vojtech Panek, Zuzana Kukelova, Torsten Sattler

Tag: pose estimation, feature matching, autonomous robot, point cloud, camera pose, 3d model

2207.11484 GraphFit: Learning Multi-scale Graph-Convolutional Representation for Point Cloud Normal Estimation

Arxiv Link: http://arxiv.org/abs/2207.11484

Github Link: https://github.com/uestcjay/graphfit

Authors: Keqiang Li, Mingyang Zhao, Huaiyu Wu, Dong-Ming Yan, Zhen Shen

Tag: 3d point cloud, benchmark dataset, attention mechanism, point cloud, feature representation

2207.11554 Towards Open Set 3D Learning: A Benchmark on Object Point Clouds

Arxiv Link: http://arxiv.org/abs/2207.11554

Github Link: https://github.com/antoalli/3d_os

Authors: Antonio Alliegro, Francesco Cappio Borlino, Tatiana Tommasi

Tag: autonomous system, point cloud, safety-critical application, 3d model

2207.11753 Label-Guided Auxiliary Training Improves 3D Object Detector

Arxiv Link: http://arxiv.org/abs/2207.11753

Github Link: https://github.com/fabiencode/lg3d

Authors: Yaomin Huang, Xinmei Liu, Yichen Zhu, Zhiyuan Xu, Chaomin Shen

Tag: object detection, point cloud, object detector, bounding box

2207.12654 ProposalContrast: Unsupervised Pre-training for LiDAR-based 3D Object Detection

Arxiv Link: http://arxiv.org/abs/2207.12654

Github Link: https://github.com/yinjunbo/proposalcontrast

Authors: Junbo Yin, Dingfu Zhou, Liangjun Zhang, Jin Fang, Cheng-Zhong Xu

Tag: object detection, point cloud, receptive field

2207.12655 Semi-supervised 3D Object Detection with Proficient Teachers

Arxiv Link: http://arxiv.org/abs/2207.12655

Github Link: https://github.com/yinjunbo/proficientteachers

Authors: Junbo Yin, Jin Fang, Dingfu Zhou, Liangjun Zhang, Cheng-Zhong Xu

Tag: teacher model, object detection, contrastive learning, supervised learning, point cloud, pseudo label, autonomous driving, object detector

2207.12691 CENet: Toward Concise and Efficient LiDAR Semantic Segmentation for Autonomous Driving

Arxiv Link: http://arxiv.org/abs/2207.12691

Github Link: https://github.com/huixiancheng/cenet

Authors: Hui-Xian Cheng, Xian-Feng Han, Guo-Qiang Xiao

Tag: segmentation network, activation function, semantic segmentation, point cloud, autonomous driving, lidar point cloud

2207.14268 MonteBoxFinder: Detecting and Filtering Primitives to Fit a Noisy Point Cloud

Arxiv Link: http://arxiv.org/abs/2207.14268

Github Link: https://github.com/michaelramamonjisoa/monteboxfinder

Authors: Michaël Ramamonjisoa, Sinisa Stekovic, Vincent Lepetit

Tag: point cloud, optimization algorithm

## CV / Pose Estimation

2207.10762 MeshLoc: Mesh-Based Visual Localization

Arxiv Link: http://arxiv.org/abs/2207.10762

Github Link: https://github.com/tsattler/meshloc_release

Authors: Vojtech Panek, Zuzana Kukelova, Torsten Sattler

Tag: pose estimation, feature matching, autonomous robot, point cloud, camera pose, 3d model

2207.10955 Faster VoxelPose: Real-time 3D Human Pose Estimation by Orthographic Projection

Arxiv Link: http://arxiv.org/abs/2207.10955

Github Link: https://github.com/AlvinYH/Faster-VoxelPose

Authors: Hang Ye, Wentao Zhu, Chunyu Wang, Rujie Wu, Yizhou Wang

Tag: real-time, pose estimation, human pose estimation, bounding box

2207.12537 Live Stream Temporally Embedded 3D Human Body Pose and Shape Estimation

Arxiv Link: http://arxiv.org/abs/2207.12537

Github Link: https://github.com/ostadabbas/tepose

Authors: Zhouping Wang, Sarah Ostadabbas

Tag: motion estimation, human pose estimation, pose estimation, graph convolutional network, real-time, convolutional network, adversarial training, human body, human behavior

2207.13264 Instance-specific 6-DoF Object Pose Estimation from Minimal Annotation

Arxiv Link: http://arxiv.org/abs/2207.13264

Github Link: https://github.com/rohanpsingh/objectkeypointtrainer

Authors: Rohan Pratap Singh, Iori Kumagai, Antonio Gabas, Mehdi Benallegue, Yusuke Yoshiyasu

Tag: deep neural network, neural network, pose estimation, lighting condition, camera pose

2207.13784 AvatarPoser: Articulated Full-Body Pose Tracking from Sparse Motion Sensing

Arxiv Link: http://arxiv.org/abs/2207.13784

Github Link: https://github.com/eth-siplab/avatarposer

Authors: Jiaxi Jiang, Paul Streli, Huajian Qiu, Andreas Fender, Larissa Laich

Tag: pose estimation, real-time, transformer encoder, learning-based method

## RL / Reinforcement Learning

2207.10763 Sim-to-real Deep Reinforcement Learning for Comparing Low-cost High-Resolution Robot Touch

Arxiv Link: http://arxiv.org/abs/2207.10763

Github Link: https://github.com/ac-93/tactile_gym

Authors: Yijiong Lin, John Lloyd, Alex Church, Nathan F. Lepora

Tag: deep reinforcement learning, reinforcement learning

2207.11432 Driver Dojo: A Benchmark for Generalizable Reinforcement Learning for Autonomous Driving

Arxiv Link: http://arxiv.org/abs/2207.11432

Github Link: https://github.com/seawee1/driver-dojo

Authors: Sebastian Rietsch, Shih-Yuan Huang, Georgios Kontes, Axel Plinge, Christopher Mutschler

Tag: autonomous driving, reinforcement learning

2207.11584 Hierarchical Kickstarting for Skill Transfer in Reinforcement Learning

Arxiv Link: http://arxiv.org/abs/2207.11584

Github Link: https://github.com/ucl-dark/skillhack

Authors: Michael Matthews, Mikayel Samvelyan, Jack Parker-Holder, Edward Grefenstette, Tim Rocktäschel

Tag: reinforcement learning, inductive bias, sparse reward, reward function, rl agent

2207.12644 Learning Bipedal Walking On Planned Footsteps For Humanoid Robot

Arxiv Link: http://arxiv.org/abs/2207.12644

Github Link: https://github.com/rohanpsingh/learninghumanoidwalking

Authors: Rohan Pratap Singh, Mehdi Benallegue, Mitsuharu Morisawa, Rafael Cisneros, Fumio Kanehiro

Tag: curriculum learning, deep reinforcement learning, reinforcement learning, simulation environment

2207.13249 AADG: Automatic Augmentation for Domain Generalization on Retinal Image Segmentation

Arxiv Link: http://arxiv.org/abs/2207.13249

Github Link: https://github.com/crazorback/aadg

Authors: Junyan Lyu, Yiqi Zhang, Yijin Huang, Li Lin, Pujin Cheng

Tag: source code, reinforcement learning, convolutional neural network, generalization performance, neural network, segmentation task, deep reinforcement learning, domain generalization, data augmentation, image segmentation, medical image, medical image segmentation, adversarial training

2207.13453 Safe and Robust Experience Sharing for Deterministic Policy Gradient Algorithms

Arxiv Link: http://arxiv.org/abs/2207.13453

Github Link: https://github.com/baturaysaglam/dase

Authors: Baturay Saglam, Dogan C. Cicek, Furkan B. Mutlu, Suleyman S. Kozat

Tag: deep reinforcement learning, reinforcement learning

## CV / Transformer

2207.10774 Focused Decoding Enables 3D Anatomical Detection by Transformers

Arxiv Link: http://arxiv.org/abs/2207.10774

Github Link: https://github.com/bwittmann/transoar

Authors: Bastian Wittmann, Fernando Navarro, Suprosanna Shit, Bjoern Menze

Tag: object detection, attention mechanism, vision transformer, transformer encoder, encoder-decoder architecture

2207.11860 Behind Every Domain There is a Shift: Adapting Distortion-aware Vision Transformers for Panoramic Semantic Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11860

Github Link: https://github.com/jamycheung/trans4pass

Authors: Jiaming Zhang, Kailun Yang, Hao Shi, Simon Reiß, Kunyu Peng

Tag: unsupervised domain adaptation, semantic segmentation, domain adaptation, vision transformer

2207.14284 HorNet: Efficient High-Order Spatial Interactions with Recursive Gated Convolution

Arxiv Link: http://arxiv.org/abs/2207.14284

Github Link: https://github.com/raoyongming/hornet

Authors: Yongming Rao, Wenliang Zhao, Yansong Tang, Jie Zhou, Ser-Nam Lim

Tag: self-attention, object detection, swin transformer, imagenet classification, semantic segmentation, vision transformer

## CV / Medical Image

2207.10804 Suppressing Poisoning Attacks on Federated Learning for Medical Imaging

Arxiv Link: http://arxiv.org/abs/2207.10804

Github Link: https://github.com/naiftt/spafd

Authors: Naif Alkhunaizi, Dmitry Kamzolov, Martin Takáč, Karthik Nandakumar

Tag: federated learning, medical imaging, privacy concern

2207.11553 High-Resolution Swin Transformer for Automatic Medical Image Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11553

Github Link: https://github.com/auroua/hrstnet

Authors: Chen Wei, Shenghan Ren, Kaitai Guo, Haihong Hu, Jimin Liang

Tag: u-net, feature map, transformer network, swin transformer, image segmentation, medical image, medical image segmentation

2207.11581 Self-Supervised Learning of Echocardiogram Videos Enables Data-Efficient Clinical Diagnosis

Arxiv Link: http://arxiv.org/abs/2207.11581

Github Link: https://github.com/cards-yale/echo-ssl-aortic-stenosis

Authors: Gregory Holste, Evangelos K. Oikonomou, Bobak Mortazavi, Zhangyang Wang, Rohan Khera

Tag: supervised learning, deep learning, medical image, transfer learning, representation learning, self-supervised learning

2207.11683 PCA: Semi-supervised Segmentation with Patch Confidence Adversarial Training

Arxiv Link: http://arxiv.org/abs/2207.11683

Github Link: https://github.com/HiLab-git/SSL4MIS

Authors: Zihang Xu, Zhenghua Xu, Shuo Zhang, Thomas Lukasiewicz

Tag: segmentation performance, supervised learning, semantic information, deep learning, classification result, image segmentation, medical image, medical image segmentation, adversarial training

2207.12872 Generalized Probabilistic U-Net for medical image segementation

Arxiv Link: http://arxiv.org/abs/2207.12872

Github Link: https://github.com/ishaanb92/generalizedprobabilisticunet

Authors: Ishaan Bhat, Josien P.W. Pluim, Hugo J. Kuijf

Tag: u-net, medical image, gaussian distribution, latent space

2207.13249 AADG: Automatic Augmentation for Domain Generalization on Retinal Image Segmentation

Arxiv Link: http://arxiv.org/abs/2207.13249

Github Link: https://github.com/crazorback/aadg

Authors: Junyan Lyu, Yiqi Zhang, Yijin Huang, Li Lin, Pujin Cheng

Tag: source code, reinforcement learning, convolutional neural network, generalization performance, neural network, segmentation task, deep reinforcement learning, domain generalization, data augmentation, image segmentation, medical image, medical image segmentation, adversarial training

2207.13415 TransNorm: Transformer Provides a Strong Spatial Normalization Mechanism for a Deep Segmentation Model

Arxiv Link: http://arxiv.org/abs/2207.13415

Github Link: https://github.com/rezazad68/transnorm

Authors: Reza Azad, Mohammad T. AL-Antary, Moein Heidari, Dorit Merhof

Tag: self-attention, convolutional neural network, neural network, u-net, segmentation task, attention mechanism, feature fusion, segmentation model, image segmentation, medical image, medical image segmentation

## GNN / Graph Network

2207.10860 Transformer with Implicit Edges for Particle-based Physics Simulation

Arxiv Link: http://arxiv.org/abs/2207.10860

Github Link: https://github.com/ftbabi/tie_eccv2022

Authors: Yidi Shao, Chen Change Loy, Bo Dai

Tag: self-attention, graph neural network, attention module, neural network

2207.11088 Layer-refined Graph Convolutional Networks for Recommendation

Arxiv Link: http://arxiv.org/abs/2207.11088

Github Link: https://github.com/enoche/imrec

Authors: Xin Zhou, Donghui Lin, Yong Liu, Chunyan Miao

Tag: fixed point, graph convolutional network, convolutional network, topological structure

2207.11247 Panoptic Scene Graph Generation

Arxiv Link: http://arxiv.org/abs/2207.11247

Github Link: https://github.com/Jingkang50/OpenPSG

Authors: Jingkang Yang, Yi Zhe Ang, Zujin Guo, Kaiyang Zhou, Wayne Zhang

Tag: bounding box, panoptic segmentation, graph representation

2207.12537 Live Stream Temporally Embedded 3D Human Body Pose and Shape Estimation

Arxiv Link: http://arxiv.org/abs/2207.12537

Github Link: https://github.com/ostadabbas/tepose

Authors: Zhouping Wang, Sarah Ostadabbas

Tag: motion estimation, human pose estimation, pose estimation, graph convolutional network, real-time, convolutional network, adversarial training, human body, human behavior

2207.11996 Generative Subgraph Contrast for Self-Supervised Graph Representation Learning

Arxiv Link: http://arxiv.org/abs/2207.11996

Github Link: https://github.com/yh-han/gsc

Authors: Yuehui Han, Le Hui, Haobo Jiang, Jianjun Qian, Jin Xie

Tag: graph representation, contrastive loss, node classification, benchmark dataset, contrastive learning, wasserstein distance, representation learning, learning framework

2207.13262 Factorial User Modeling with Hierarchical Graph Neural Network for Enhanced Sequential Recommendation

Arxiv Link: http://arxiv.org/abs/2207.13262

Github Link: https://github.com/xlx0010/hgnn

Authors: Lyuxin Xue, Deqing Yang, Yanghua Xiao

Tag: user preference, graph neural network, neural network

## CV / Instance Segmentation

2207.10936 Long-tailed Instance Segmentation using Gumbel Optimized Loss

Arxiv Link: http://arxiv.org/abs/2207.10936

Github Link: https://github.com/kostas1515/gol

Authors: Konstantinos Panagiotis Alexandridis, Jiankang Deng, Anh Nguyen, Shan Luo

Tag: instance segmentation, object detection

2207.11103 DeVIS: Making Deformable Transformers Work for Video Instance Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11103

Github Link: https://github.com/acaelles97/devis

Authors: Adrià Caelles, Tim Meinhardt, Guillem Brasó, Laura Leal-Taixé

Tag: video instance segmentation, video sequence, feature map, object detection, instance segmentation, segmentation task

2207.12824 Compositional Human-Scene Interaction Synthesis with Semantic Control

Arxiv Link: http://arxiv.org/abs/2207.12824

Github Link: https://github.com/zkf1997/coins

Authors: Kaifeng Zhao, Shaofei Wang, Yan Zhang, Thabo Beeler, Siyu Tang

Tag: generative model, instance segmentation, latent space, human body

## CV / Semantic Segmentation

2207.11102 Physiology-based simulation of the retinal vasculature enables annotation-free segmentation of OCT angiographs

Arxiv Link: http://arxiv.org/abs/2207.11102

Github Link: https://github.com/iMED-Lab/OCTA-Net-OCTA-Vessel-Segmentation-Network

Authors: Martin J. Menten, Johannes C. Paetzold, Alina Dima, Bjoern H. Menze, Benjamin Knier

Tag: semantic segmentation, learning-based method, synthetic data

2207.11549 Self-Support Few-Shot Semantic Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11549

Github Link: https://github.com/fanq15/ssp

Authors: Qi Fan, Wenjie Pei, Yu-Wing Tai, Chi-Keung Tang

Tag: semantic segmentation

2207.11722 Semantic-guided Multi-Mask Image Harmonization

Arxiv Link: http://arxiv.org/abs/2207.11722

Github Link: https://github.com/xuqianren/semantic-guided-multi-mask-image-harmonization

Authors: Xuqian Ren, Yifan Liu

Tag: semantic segmentation, segmentation mask

2207.12546 The Bearable Lightness of Big Data: Towards Massive Public Datasets in Scientific Machine Learning

Arxiv Link: http://arxiv.org/abs/2207.12546

Github Link: https://github.com/ihmegroup/lossy_ml

Authors: Wai Tong Chung, Ki Sung Jung, Jacqueline H. Chen, Matthias Ihme

Tag: semantic segmentation, deep learning

2207.12691 CENet: Toward Concise and Efficient LiDAR Semantic Segmentation for Autonomous Driving

Arxiv Link: http://arxiv.org/abs/2207.12691

Github Link: https://github.com/huixiancheng/cenet

Authors: Hui-Xian Cheng, Xian-Feng Han, Guo-Qiang Xiao

Tag: segmentation network, activation function, semantic segmentation, point cloud, autonomous driving, lidar point cloud

2207.13297 GPS-GLASS: Learning Nighttime Semantic Segmentation Using Daytime Video and GPS data

Arxiv Link: http://arxiv.org/abs/2207.13297

Github Link: https://github.com/jimmy9704/gps-glass

Authors: Hongjae Lee, Changwoo Han, Seung-Won Jung

Tag: segmentation network, source code, semantic segmentation, autonomous driving, video frame

2207.13600 Lightweight and Progressively-Scalable Networks for Semantic Segmentation

Arxiv Link: http://arxiv.org/abs/2207.13600

Github Link: https://github.com/yihengzhang-cv/lps-net

Authors: Yiheng Zhang, Ting Yao, Zhaofan Qiu, Tao Mei

Tag: semantic segmentation, learning framework

2207.11860 Behind Every Domain There is a Shift: Adapting Distortion-aware Vision Transformers for Panoramic Semantic Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11860

Github Link: https://github.com/jamycheung/trans4pass

Authors: Jiaming Zhang, Kailun Yang, Hao Shi, Simon Reiß, Kunyu Peng

Tag: unsupervised domain adaptation, semantic segmentation, domain adaptation, vision transformer

2207.14284 HorNet: Efficient High-Order Spatial Interactions with Recursive Gated Convolution

Arxiv Link: http://arxiv.org/abs/2207.14284

Github Link: https://github.com/raoyongming/hornet

Authors: Yongming Rao, Wenliang Zhao, Yansong Tang, Jie Zhou, Ser-Nam Lim

Tag: self-attention, object detection, swin transformer, imagenet classification, semantic segmentation, vision transformer

## CV / Data Augmentation

2207.08531 DID-M3D: Decoupling Instance Depth for Monocular 3D Object Detection

Arxiv Link: http://arxiv.org/abs/2207.08531

Github Link: https://github.com/spengliang/did-m3d

Authors: Liang Peng, Xiaopei Wu, Zheng Yang, Haifeng Liu, Deng Cai

Tag: depth estimation, object detection, data augmentation, ablation study

2207.12535 Semi-Leak: Membership Inference Attacks Against Semi-supervised Learning

Arxiv Link: http://arxiv.org/abs/2207.12535

Github Link: https://github.com/xinleihe/semi-leak

Authors: Xinlei He, Hongbin Liu, Neil Zhenqiang Gong, Yang Zhang

Tag: supervised learning, data augmentation, data privacy

2207.12757 Controllable User Dialogue Act Augmentation for Dialogue State Tracking

Arxiv Link: http://arxiv.org/abs/2207.12757

Github Link: https://github.com/miulab/cuda-dst

Authors: Chun-Mao Lai, Ming-Hao Hsu, Chao-Wei Huang, Yun-Nung Chen

Tag: data augmentation, dialogue state tracking

2207.11984 RA-Depth: Resolution Adaptive Self-Supervised Monocular Depth Estimation

Arxiv Link: http://arxiv.org/abs/2207.11984

Github Link: https://github.com/hmhemu/ra-depth

Authors: Mu He, Le Hui, Yikai Bian, Jian Ren, Jin Xie

Tag: depth estimation, data augmentation, data augmentation method

2207.13249 AADG: Automatic Augmentation for Domain Generalization on Retinal Image Segmentation

Arxiv Link: http://arxiv.org/abs/2207.13249

Github Link: https://github.com/crazorback/aadg

Authors: Junyan Lyu, Yiqi Zhang, Yijin Huang, Li Lin, Pujin Cheng

Tag: source code, reinforcement learning, convolutional neural network, generalization performance, neural network, segmentation task, deep reinforcement learning, domain generalization, data augmentation, image segmentation, medical image, medical image segmentation, adversarial training

2207.14255 Efficient Training of Language Models to Fill in the Middle

Arxiv Link: http://arxiv.org/abs/2207.14255

Github Link: https://github.com/openai/human-eval-infilling

Authors: Mohammad Bavarian, Heewoo Jun, Nikolas Tezak, John Schulman, Christine McLeavey

Tag: data augmentation, language model, autoregressive language model

## NLP / Relation Extraction

2207.11433 Enhancing Document-level Relation Extraction by Entity Knowledge Injection

Arxiv Link: http://arxiv.org/abs/2207.11433

Github Link: https://github.com/nju-websoft/kire

Authors: Xinyi Wang, Zitao Wang, Weijian Sun, Wei Hu

Tag: relation extraction, knowledge graph, benchmark dataset

## NLP / Knowledge Graph

2207.11433 Enhancing Document-level Relation Extraction by Entity Knowledge Injection

Arxiv Link: http://arxiv.org/abs/2207.11433

Github Link: https://github.com/nju-websoft/kire

Authors: Xinyi Wang, Zitao Wang, Weijian Sun, Wei Hu

Tag: relation extraction, knowledge graph, benchmark dataset

2207.11436 Facing Changes: Continual Entity Alignment for Growing Knowledge Graphs

Arxiv Link: http://arxiv.org/abs/2207.11436

Github Link: https://github.com/nju-websoft/contea

Authors: Yuxin Wang, Yuanning Cui, Wenqiang Liu, Zequn Sun, Yiqiao Jiang

Tag: knowledge graph

2207.11442 μKG: A Library for Multi-source Knowledge Graph Embeddings and Applications

Arxiv Link: http://arxiv.org/abs/2207.11442

Github Link: https://github.com/nju-websoft/mukg

Authors: Xindi Luo, Zequn Sun, Wei Hu

Tag: link prediction, downstream task, benchmark dataset, question answering, knowledge graph, deep learning, representation learning

2207.12888 LaKo: Knowledge-driven Visual Question Answering via Late Knowledge-to-Text Injection

Arxiv Link: http://arxiv.org/abs/2207.12888

Github Link: https://github.com/hackerchenzhuo/LaKo

Authors: Zhuo Chen, Yufeng Huang, Jiaoyan Chen, Yuxia Geng, Yin Fang

Tag: language model, question answering, knowledge graph, text generation

## NLP / Question Answering

2207.11442 μKG: A Library for Multi-source Knowledge Graph Embeddings and Applications

Arxiv Link: http://arxiv.org/abs/2207.11442

Github Link: https://github.com/nju-websoft/mukg

Authors: Xindi Luo, Zequn Sun, Wei Hu

Tag: link prediction, downstream task, benchmark dataset, question answering, knowledge graph, deep learning, representation learning

2207.12576 WinoGAViL: Gamified Association Benchmark to Challenge Vision-and-Language Models

Arxiv Link: http://arxiv.org/abs/2207.12576

Github Link: https://github.com/winogavil/winogavil-experiments

Authors: Yonatan Bitton, Nitzan Bitton Guetta, Ron Yosef, Yuval Elovici, Mohit Bansal

Tag: question answering, language model

2207.12888 LaKo: Knowledge-driven Visual Question Answering via Late Knowledge-to-Text Injection

Arxiv Link: http://arxiv.org/abs/2207.12888

Github Link: https://github.com/hackerchenzhuo/LaKo

Authors: Zhuo Chen, Yufeng Huang, Jiaoyan Chen, Yuxia Geng, Yin Fang

Tag: language model, question answering, knowledge graph, text generation

2207.13332 RealTime QA: What's the Answer Right Now?

Arxiv Link: http://arxiv.org/abs/2207.13332

Github Link: https://github.com/realtimeqa/realtimeqa_public

Authors: Jungo Kasai, Keisuke Sakaguchi, Yoichi Takahashi, Ronan Le Bras, Akari Asai

Tag: information retrieval, question answering, language model, real-time, pretrained language model, qa system

## NLP / Transformer

2207.11553 High-Resolution Swin Transformer for Automatic Medical Image Segmentation

Arxiv Link: http://arxiv.org/abs/2207.11553

Github Link: https://github.com/auroua/hrstnet

Authors: Chen Wei, Shenghan Ren, Kaitai Guo, Haihong Hu, Jimin Liang

Tag: u-net, feature map, transformer network, swin transformer, image segmentation, medical image, medical image segmentation

2207.11808 ArmanEmo: A Persian Dataset for Text-based Emotion Detection

Arxiv Link: http://arxiv.org/abs/2207.11808

Github Link: https://github.com/arman-rayan-sharif/arman-text-emotion

Authors: Hossein Mirzaee (1), Javad Peymanfard (2), Hamid Habibzadeh Moshtaghin (3), Hossein Zeinali (1) ((1) Amirkabir University of Technology, (2) Iran University of Science and Technology)

Tag: transformer-based language model, transfer learning, language model

2207.12661 Learning Visual Representation from Modality-Shared Contrastive Language-Image Pre-training

Arxiv Link: http://arxiv.org/abs/2207.12661

Github Link: https://github.com/hxyou/msclip

Authors: Haoxuan You, Luowei Zhou, Bin Xiao, Noel Codella, Yu Cheng

Tag: downstream task, vision task, multiple modality, visual representation, imagenet classification, transformer model

## NLP / Pretrained language model

2207.13332 RealTime QA: What's the Answer Right Now?

Arxiv Link: http://arxiv.org/abs/2207.13332

Github Link: https://github.com/realtimeqa/realtimeqa_public

Authors: Jungo Kasai, Keisuke Sakaguchi, Yoichi Takahashi, Ronan Le Bras, Akari Asai

Tag: information retrieval, question answering, language model, real-time, pretrained language model, qa system

## NLP / Word Embedding

2207.13842 Dive into Machine Learning Algorithms for Influenza Virus Host Prediction with Hemagglutinin Sequences

Arxiv Link: http://arxiv.org/abs/2207.13842

Github Link: https://github.com/dkdjb/iav_host_prediction

Authors: Yanhua Xu, Dominik Wojtczak

Tag: word embedding, neural network

## Others

2207.10719 Synthetic Dataset Generation for Adversarial Machine Learning Research

Arxiv Link: http://arxiv.org/abs/2207.10719

Github Link: https://github.com/carla-simulator/carla

Authors: Xiruo Liu, Shibani Singh, Cory Cornelius, Colin Busho, Mike Tan

Tag: synthetic dataset, cyber-physical system, adversarial example

2207.10732 Explainable AI Algorithms for Vibration Data-based Fault Detection: Use Case-adadpted Methods and Critical Evaluation

Arxiv Link: http://arxiv.org/abs/2207.10732

Github Link: https://github.com/o-mey/xai-vibration-fault-detection

Authors: Oliver Mey Deniz Neufeld

Tag: deep neural network convolutional neural network neural network synthetic data fourier transform explainable ai

2207.10765 Towards Interpretable Video Super-Resolution via Alternating Optimization

Arxiv Link: http://arxiv.org/abs/2207.10765

Github Link: https://github.com/caojiezhang/davsr

Authors: Jiezhang Cao Jingyun Liang Kai Zhang Wenguan Wang Qin Wang

Tag: motion blur video sequence learning-based method

2207.10816 Mathematical Model of Strong Physically Unclonable Functions Based on Hybrid Boolean Network

Arxiv Link: http://arxiv.org/abs/2207.10816

Github Link: https://github.com/noeloikeau/networkm

Authors: Noeloikeau Charlot Daniel J. Gauthier Daniel Canaday Andrew Pomerance

Tag:

2207.10830 Automated Dilated Spatio-Temporal Synchronous Graph Modeling for Traffic Prediction

Arxiv Link: http://arxiv.org/abs/2207.10830

Github Link: https://github.com/jinguangyin/auto-dstsgn

Authors: Guangyin Jin Fuxian Li Jinlei Zhang Mudan Wang Jincai Huang

Tag: graph convolution source code graph structure representation learning

2207.10852 Spatio-Temporal Deformable Attention Network for Video Deblurring

Arxiv Link: http://arxiv.org/abs/2207.10852

Github Link: https://github.com/huicongzhang/stdan

Authors: Huicong Zhang Haozhe Xie Hongxun Yao

Tag: video frame

2207.10856 Prototype-Guided Continual Adaptation for Class-Incremental Unsupervised Domain Adaptation

Arxiv Link: http://arxiv.org/abs/2207.10856

Github Link: https://github.com/hongbin98/proca

Authors: Hongbin Lin Yifan Zhang Zhen Qiu Shuaicheng Niu Chuang Gan

Tag: unsupervised domain adaptation domain adaptation source code

2207.10869 Optimizing Image Compression via Joint Learning with Denoising

Arxiv Link: http://arxiv.org/abs/2207.10869

Github Link: https://github.com/felixcheng97/denoisecompression

Authors: Ka Leong Cheng Yueqi Xie Qifeng Chen

Tag: source code

2207.10878 An Ensemble Approach for Multiple Emotion Descriptors Estimation Using Multi-task Learning

Arxiv Link: http://arxiv.org/abs/2207.10878

Github Link: https://github.com/tmtvaa/abaw4

Authors: Irfan Haider Minh-Trieu Tran Soo-Hyung Kim Hyung-Jeong Yang Guee-Sang Lee

Tag: attention mechanism

2207.10883 My View is the Best View: Procedure Learning from Egocentric Video

Arxiv Link: http://arxiv.org/abs/2207.10883

Github Link: https://github.com/Sid2697/EgoProceL-egocentric-procedure-learning

Authors: Siddhant Bansal Chetan Arora C.V. Jawahar

Tag: source code

2207.10888 FairGRAPE: Fairness-aware GRAdient Pruning mEthod for Face Attribute Classification

Arxiv Link: http://arxiv.org/abs/2207.10888

Github Link: https://github.com/bernardo1998/fairgrape

Authors: Xiaofeng Lin Seungbae Kim Jungseock Joo

Tag:

2207.10897 Efficient Modeling of Future Context for Image Captioning

Arxiv Link: http://arxiv.org/abs/2207.10897

Github Link: https://github.com/feizc/future-caption

Authors: Zhengcong Fei Junshi Huang Xiaoming Wei Xiaolin Wei

Tag: ms coco source code

2207.10899 Decoupled Adversarial Contrastive Learning for Self-supervised Adversarial Robustness

Arxiv Link: http://arxiv.org/abs/2207.10899

Github Link: https://github.com/pantheon5100/deacl

Authors: Chaoning Zhang Kang Zhang Chenshuang Zhang Axi Niu Jiu Feng

Tag: robust representation contrastive learning representation learning supervised learning adversarial training self-supervised learning

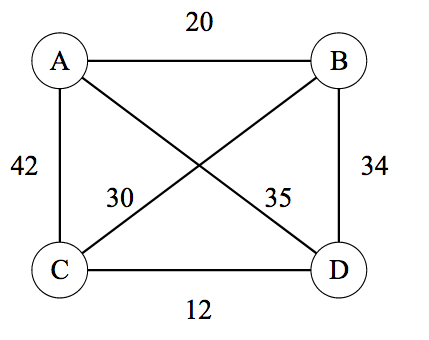

2207.10915 Optimization of Forcemyography Sensor Placement for Arm Movement Recognition

Arxiv Link: http://arxiv.org/abs/2207.10915

Github Link: https://github.com/jerryx1110/iros22-fmg-sensor-optimization

Authors: Xiaohao Xu Zihao Du Huaxin Zhang Ruichao Zhang Zihan Hong

Tag: downstream task optimization algorithm graph theory sensor placement

2207.10931 What's in the laundromat? Mapping and characterising offshore owned domestic property in London

Arxiv Link: http://arxiv.org/abs/2207.10931

Github Link: https://github.com/jonnob/empty_homes_london

Authors: Jonathan Bourne Andrea Ingianni Rex McKenzie

Tag:

2207.10941 Respecting Time Series Properties Makes Deep Time Series Forecasting Perfect

Arxiv Link: http://arxiv.org/abs/2207.10941

Github Link: https://github.com/origamisl/rtnet

Authors: Li Shen Yuning Wei Yangzhu Wang

Tag: benchmark dataset source code time series

2207.10942 Efficient Testing of Deep Neural Networks via Decision Boundary Analysis

Arxiv Link: http://arxiv.org/abs/2207.10942

Github Link: https://github.com/anony4paper/aries

Authors: Qiang Hu Yuejun Guo Xiaofei Xie Maxime Cordy Lei Ma

Tag: deep neural network decision boundary neural network deep learning application domain

2207.10947 Multilabel Prototype Generation for Data Reduction in k-Nearest Neighbour classification

Arxiv Link: http://arxiv.org/abs/2207.10947

Github Link: https://github.com/jose-jvmas/multilabel_pg

Authors: Jose J. Valero-Mas Antonio Javier Gallego Pablo Alonso-Jiménez Xavier Serra

Tag:

2207.10950 Scale dependant layer for self-supervised nuclei encoding

Arxiv Link: http://arxiv.org/abs/2207.10950

Github Link: https://github.com/peterjacknaylor/scaledependantcnn

Authors: Peter Naylor Yao-Hung Hubert Tsai Marick Laé Makoto Yamada

Tag: supervised learning downstream task unsupervised method self-supervised learning

2207.11001 POP: Mining POtential Performance of new fashion products via webly cross-modal query expansion

Arxiv Link: http://arxiv.org/abs/2207.11001

Github Link: https://github.com/humaticslab/pop-mining-potential-performance

Authors: Christian Joppi Geri Skenderi Marco Cristani

Tag: time series

2207.11012 Fact sheet: Automatic Self-Reported Personality Recognition Track

Arxiv Link: http://arxiv.org/abs/2207.11012

Github Link: https://github.com/gizemsogancioglu/fgm_utrecht

Authors: Francisca Pessanha Gizem Sogancioglu

Tag: personality trait

2207.11018 Learning from what we know: How to perform vulnerability prediction using noisy historical data

Arxiv Link: http://arxiv.org/abs/2207.11018

Github Link: https://github.com/garghub/trovon

Authors: Aayush Garg Renzo Degiovanni Matthieu Jimenez Maxime Cordy Mike Papadakis

Tag:

2207.11025 Custom Structure Preservation in Face Aging

Arxiv Link: http://arxiv.org/abs/2207.11025

Github Link: https://github.com/guillermogotre/cusp

Authors: Guillermo Gomez-Trenado (1) Stéphane Lathuilière (2) Pablo Mesejo (1) Óscar Cordón (1) ((1) DaSCI research institute, DECSAI)

Tag: user study pretrained model

2207.11056 Energy-Aware Planning-Scheduling for Autonomous Aerial Robots

Arxiv Link: http://arxiv.org/abs/2207.11056

Github Link: https://github.com/adamseew/energy-planning-paper

Authors: Adam Seewald Héctor García de Marina Henrik Skov Midtiby Ulrik Pagh Schultz

Tag:

2207.11075 RealFlow: EM-based Realistic Optical Flow Dataset Generation from Video

Arxiv Link: http://arxiv.org/abs/2207.11075

Github Link: https://github.com/megvii-research/realflow

Authors: Yunhui Han Kunming Luo Ao Luo Jiangyu Liu Haoqiang Fan

Tag: synthetic dataset video frame

2207.11118 Rethinking the Reference-based Distinctive Image Captioning

Arxiv Link: http://arxiv.org/abs/2207.11118

Github Link: https://github.com/maoyj1998/transdic

Authors: Yangjun Mao Long Chen Zhihong Jiang Dong Zhang Zhimeng Zhang

Tag: reference image

2207.11120 On Controller Tuning with Time-Varying Bayesian Optimization

Arxiv Link: http://arxiv.org/abs/2207.11120

Github Link: https://github.com/brunzema/uitvbo

Authors: Paul Brunzema Alexander von Rohr Sebastian Trimpe

Tag: optimal control bayesian optimization gaussian processes numerical experiment spatial dimension

2207.11163 Adaptive Soft Contrastive Learning

Arxiv Link: http://arxiv.org/abs/2207.11163

Github Link: https://github.com/mrchenfeng/ascl_icpr2022

Authors: Chen Feng Ioannis Patras

Tag: contrastive learning supervised learning representation learning self-supervised learning learning framework

2207.11173 Verifying Fairness in Quantum Machine Learning

Arxiv Link: http://arxiv.org/abs/2207.11173

Github Link: https://github.com/veri-q/fairness

Authors: Ji Guan Wang Fang Mingsheng Ying

Tag: quantum computing decision making

2207.11192 Progressive Deblurring of Diffusion Models for Coarse-to-Fine Image Synthesis

Arxiv Link: http://arxiv.org/abs/2207.11192

Github Link: https://github.com/sangyun884/blur-diffusion

Authors: Sangyun Lee Hyungjin Chung Jaehyeon Kim Jong Chul Ye

Tag: inductive bias diffusion model

2207.11221 Domain Generalization for Activity Recognition via Adaptive Feature Fusion

Arxiv Link: http://arxiv.org/abs/2207.11221

Github Link: https://github.com/jindongwang/transferlearning

Authors: Xin Qin Jindong Wang Yiqiang Chen Wang Lu Xinlong Jiang

Tag: domain adaptation domain generalization feature fusion generalization performance

2207.11231 Learning Unsupervised Hierarchies of Audio Concepts

Arxiv Link: http://arxiv.org/abs/2207.11231

Github Link: https://github.com/deezer/concept_hierarchy

Authors: Darius Afchar Romain Hennequin Vincent Guigue

Tag: computer vision

2207.11236 Twitmo: A Twitter Data Topic Modeling and Visualization Package for R

Arxiv Link: http://arxiv.org/abs/2207.11236

Github Link: https://github.com/abuchmueller/Twitmo

Authors: Andreas Buchmüller Gillian Kant Christoph Weisser Benjamin Säfken Krisztina Kis-Katos

Tag: latent dirichlet allocation

2207.11237 Defending Substitution-Based Profile Pollution Attacks on Sequential Recommenders

Arxiv Link: http://arxiv.org/abs/2207.11237

Github Link: https://github.com/yueeeeeeee/recsys-substitution-defense

Authors: Zhenrui Yue Huimin Zeng Ziyi Kou Lanyu Shang Dong Wang

Tag: recommender system probability distribution adversarial attack convex hull adversarial example adversarial training

2207.11243 Multiface: A Dataset for Neural Face Rendering

Arxiv Link: http://arxiv.org/abs/2207.11243

Github Link: https://github.com/facebookresearch/multiface

Authors: Cheng-hsin Wuu Ningyuan Zheng Scott Ardisson Rohan Bali Danielle Belko

Tag: facial expression human face ablation study

2207.11244 Deep Learning Hyperparameter Optimization for Breast Mass Detection in Mammograms

Arxiv Link: http://arxiv.org/abs/2207.11244

Github Link: https://github.com/aralab-unr/ga-mammograms

Authors: Adarsh Sehgal Muskan Sehgal Hung Manh La George Bebis

Tag: genetic algorithm breast cancer dl model deep learning

2207.11321 A flexible PageRank-based graph embedding framework closely related to spectral eigenvector embedding

Arxiv Link: http://arxiv.org/abs/2207.11321

Github Link: https://github.com/dishashur/log-pagerank

Authors: Disha Shur Yufan Huang David F. Gleich

Tag:

2207.11327 Learning from Multiple Annotator Noisy Labels via Sample-wise Label Fusion

Arxiv Link: http://arxiv.org/abs/2207.11327

Github Link: https://github.com/zhengqigao/learning-from-multiple-annotator-noisy-labels

Authors: Zhengqi Gao Fan-Keng Sun Mingran Yang Sucheng Ren Zikai Xiong

Tag: supervised learning deep learning

2207.11335 Generalizing Homophily to Simplicial Complexes

Arxiv Link: http://arxiv.org/abs/2207.11335

Github Link: https://github.com/arnabsarker/simplicialhomophily

Authors: Arnab Sarker Natalie Northrup Ali Jadbabaie

Tag:

2207.11372 Evaluation of Different Annotation Strategies for Deployment of Parking Spaces Classification System

Arxiv Link: http://arxiv.org/abs/2207.11372

Github Link: https://github.com/andrehochuli/pklot-eval-annotations

Authors: Andre G. Hochuli Alceu S. Britto Jr. Paulo R. L. de Almeida Williams B. S. Alves Fabio M. C. Cagni

Tag: bounding box

2207.11463 When Counting Meets HMER: Counting-Aware Network for Handwritten Mathematical Expression Recognition

Arxiv Link: http://arxiv.org/abs/2207.11463

Github Link: https://github.com/lbh1024/can

Authors: Bohan Li Ye Yuan Dingkang Liang Xiao Liu Zhilong Ji

Tag: benchmark dataset source code attention mechanism

2207.11464 Learning Object Placement via Dual-path Graph Completion

Arxiv Link: http://arxiv.org/abs/2207.11464

Github Link: https://github.com/bcmi/graconet-object-placement

Authors: Siyuan Zhou Liu Liu Li Niu Liqing Zhang

Tag: receptive field

2207.11482 Multimodal Emotion Recognition with Modality-Pairwise Unsupervised Contrastive Loss

Arxiv Link: http://arxiv.org/abs/2207.11482

Github Link: https://github.com/ricfrr/mpuc-mer

Authors: Riccardo Franceschini Enrico Fini Cigdem Beyan Alessandro Conti Federica Arrigoni

Tag: data fusion contrastive loss benchmark dataset emotion recognition supervised learning unsupervised learning

2207.11486 Time Series Prediction under Distribution Shift using Differentiable Forgetting

Arxiv Link: http://arxiv.org/abs/2207.11486

Github Link: https://github.com/jase-clarkson/pods_2022_icml_ts

Authors: Stefanos Bennett Jase Clarkson

Tag: time series distribution shift predictive model

2207.11517 Contrastive Monotonic Pixel-Level Modulation

Arxiv Link: http://arxiv.org/abs/2207.11517

Github Link: https://github.com/lukun199/monopix

Authors: Kun Lu Rongpeng Li Honggang Zhang

Tag: domain adaptation

2207.11518 Online Knowledge Distillation via Mutual Contrastive Learning for Visual Recognition

Arxiv Link: http://arxiv.org/abs/2207.11518

Github Link: https://github.com/winycg/mcl

Authors: Chuanguang Yang Zhulin An Helong Zhou Yongjun Xu Qian Zhan

Tag: mutual information student model contrastive learning intermediate layer representation learning image classification knowledge distillation transfer learning feature representation

2207.11521 Vaccine Discourse on Twitter During the COVID-19 Pandemic

Arxiv Link: http://arxiv.org/abs/2207.11521

Github Link: https://github.com/gabriellindelof/vaccine-discourse-on-twitter-during-the-covid-19-pandemic

Authors: Gabriel Lindelöf Talayeh Aledavood Barbara Keller

Tag: support vector machine

2207.11523 Unstructured Road Segmentation using Hypercolumn based Random Forests of Local Experts

Arxiv Link: http://arxiv.org/abs/2207.11523

Github Link: https://github.com/prassanna-ravishankar/slither

Authors: Prassanna Ganesh Ravishankar Antonio M. Lopez Gemma M. Sanchez

Tag: random forest convolutional neural network neural network

2207.11530 Kellect: a Kernel-Based Efficient and Lossless Event Log Collector

Arxiv Link: http://arxiv.org/abs/2207.11530

Github Link: https://github.com/acising/kellect

Authors: Tieming Chen Qijie Song Xuebo Qiu Tiantian Zhu Zhiling Zhu

Tag: event log anomaly detection

2207.11575 Testing the Robustness of Learned Index Structures

Arxiv Link: http://arxiv.org/abs/2207.11575

Github Link: https://github.com/bachfischer/logarithmicerrorregression

Authors: Matthias Bachfischer Renata Borovica-Gajic Benjamin I. P. Rubinstein

Tag: linear regression

2207.11592 Thermal half-lives of azobenzene derivatives: virtual screening based on intersystem crossing using a machine learning potential

Arxiv Link: http://arxiv.org/abs/2207.11592

Github Link: https://github.com/learningmatter-mit/azo_barriers

Authors: Simon Axelrod Eugene Shakhnovich Rafael Gomez-Bombarelli

Tag:

2207.11603 Experience with Abrupt Transition to Remote Teaching of Embedded Systems

Arxiv Link: http://arxiv.org/abs/2207.11603

Github Link: https://github.com/koniarik/teaching-embedded-remotely

Authors: Jan Koniarik Daniel Dlhopolcek Martin Ukrop

Tag:

2207.11609 Exploring the Impact of Temporal Bias in Point-of-Interest Recommendation

Arxiv Link: http://arxiv.org/abs/2207.11609

Github Link: https://github.com/rahmanidashti/contextsfair

Authors: Hossein A. Rahmani Mohammadmehdi Naghiaei Ali Tourani Yashar Deldjoo

Tag: contextual information recommender system

2207.11637 Explored An Effective Methodology for Fine-Grained Snake Recognition

Arxiv Link: http://arxiv.org/abs/2207.11637

Github Link: https://github.com/aderonhuang/fgvc9_snakeclef2022

Authors: Yong Huang Aderon Huang Wei Zhu Yanming Fang Jinghua Feng

Tag: supervised learning downstream task computer vision self-supervised learning

2207.11640 Reliable amortized variational inference with physics-based latent distribution correction

Arxiv Link: http://arxiv.org/abs/2207.11640

Github Link: https://github.com/slimgroup/reliableavi.jl

Authors: Ali Siahkoohi Gabrio Rizzuti Rafael Orozco Felix J. Herrmann

Tag: deep neural network posterior distribution neural network variational inference bayesian inference gaussian distribution prior distribution distribution shift kullback-leibler divergence

2207.11652 Counterfactual Reasoning for Out-of-distribution Multimodal Sentiment Analysis

Arxiv Link: http://arxiv.org/abs/2207.11652

Github Link: https://github.com/teng-sun/clue_model

Authors: Teng Sun Wenjie Wang Liqiang Jing Yiran Cui Xuemeng Song

Tag: sentiment analysis causal inference

2207.11677 Learnable Privacy-Preserving Anonymization for Pedestrian Images

Arxiv Link: http://arxiv.org/abs/2207.11677

Github Link: https://github.com/whuzjw/privacy-reid

Authors: Junwu Zhang Mang Ye Yao Yang

Tag: semantic information privacy protection

2207.11680 No More Fine-Tuning? An Experimental Evaluation of Prompt Tuning in Code Intelligence

Arxiv Link: http://arxiv.org/abs/2207.11680

Github Link: https://github.com/adf1178/pt4code

Authors: Chaozheng Wang Yuanhang Yang Cuiyun Gao Yun Peng Hongyu Zhang

Tag: downstream task nlp task natural language processing low-resource

2207.11685 Kernel Relative-prototype Spectral Filtering for Few-shot Learning

Arxiv Link: http://arxiv.org/abs/2207.11685

Github Link: https://github.com/zhangtao2022/dsfn

Authors: Tao Zhang Wu Huang

Tag: few-shot learning source code imagenet dataset hilbert space

2207.11717 A Priority Map for Vision-and-Language Navigation with Trajectory Plans and Feature-Location Cues

Arxiv Link: http://arxiv.org/abs/2207.11717

Github Link: https://github.com/jasonarmitage-res/pm-vln

Authors: Jason Armitage Leonardo Impett Rico Sennrich

Tag:

2207.11747 Self-dual polyhedral cones and their slack matrices

Arxiv Link: http://arxiv.org/abs/2207.11747

Github Link: https://github.com/bflourenco/self_dual_slacks

Authors: João Gouveia Bruno F. Lourenço

Tag:

2207.11759 Spatial-Temporal Federated Learning for Lifelong Person Re-identification on Distributed Edges

Arxiv Link: http://arxiv.org/abs/2207.11759

Github Link: https://github.com/MSNLAB/Federated-Lifelong-Person-ReID

Authors: Lei Zhang Guanyu Gao Huaizheng Zhang

Tag: communication cost federated learning lifelong learning

2207.11767 Snapshot Metrics Are Not Enough: Analyzing Software Repositories with Longitudinal Metrics

Arxiv Link: http://arxiv.org/abs/2207.11767

Github Link: https://github.com/softwaresystemslaboratory/prime

Authors: Nicholas Synovic Matt Hyatt Rohan Sethi Sohini Thota Shilpika

Tag: source code

2207.11769 CODiT: Conformal Out-of-Distribution Detection in Time-Series Data

Arxiv Link: http://arxiv.org/abs/2207.11769

Github Link: https://github.com/kaustubhsridhar/time-series-ood

Authors: Ramneet Kaur Kaustubh Sridhar Sangdon Park Susmit Jha Anirban Roy

Tag: computer vision training distribution safety-critical application time-series data autonomous driving anomaly detection ood detection autonomous vehicle

2207.11805 Weakly-Supervised Temporal Action Detection for Fine-Grained Videos with Hierarchical Atomic Actions

Arxiv Link: http://arxiv.org/abs/2207.11805

Github Link: https://github.com/lizhi1104/haan

Authors: Zhi Li Lu He Huijuan Xu

Tag: human behavior visual representation

2207.11814 Object State Change Classification in Egocentric Videos using the Divided Space-Time Attention Mechanism

Arxiv Link: http://arxiv.org/abs/2207.11814

Github Link: https://github.com/md-mohaiminul/objectstatechange

Authors: Md Mohaiminul Islam Gedas Bertasius

Tag: ablation study attention mechanism negative example

2207.12405 Versatile Weight Attack via Flipping Limited Bits

Arxiv Link: http://arxiv.org/abs/2207.12405

Github Link: https://github.com/jiawangbai/versatile-weight-attack

Authors: Jiawang Bai Baoyuan Wu Zhifeng Li Shu-tao Xia

Tag: deep neural network continuous optimization adversarial attack neural network

2207.12409 Automated discovery of interpretable gravitational-wave population models

Arxiv Link: http://arxiv.org/abs/2207.12409

Github Link: https://github.com/kazewong/symbolicgwpopulation_paper

Authors: Kaze W.K Wong Miles Cranmer

Tag: gaussian mixture model

2207.12496 NeuriCam: Video Super-Resolution and Colorization Using Key Frames

Arxiv Link: http://arxiv.org/abs/2207.12496

Github Link: https://github.com/vb000/neuricam

Authors: Bandhav Veluri Ali Saffari Collin Pernu Joshua Smith Michael Taylor

Tag: source code neural network feature map real-time energy consumption

2207.12534 Trainability Preserving Neural Structured Pruning

Arxiv Link: http://arxiv.org/abs/2207.12534

Github Link: https://github.com/mingsun-tse/tpp

Authors: Huan Wang Yun Fu

Tag: deep neural network deep network neural network

2207.12538 Bayesian tensor factorization for predicting clinical outcomes using integrated human genetics evidence

Arxiv Link: http://arxiv.org/abs/2207.12538

Github Link: https://github.com/cx0/icml-human-genetics

Authors: Onuralp Soylemez

Tag: success rate

2207.12570 Seeing Far in the Dark with Patterned Flash

Arxiv Link: http://arxiv.org/abs/2207.12570

Github Link: https://github.com/zhsun0357/seeing-far-in-the-dark-with-patterned-flash

Authors: Zhanghao Sun Jian Wang Yicheng Wu Shree Nayar

Tag: depth estimation image reconstruction convolutional neural network neural network

2207.12577 Compiler-Aware Neural Architecture Search for On-Mobile Real-time Super-Resolution

Arxiv Link: http://arxiv.org/abs/2207.12577

Github Link: https://github.com/wuyushuwys/compiler-aware-nas-sr

Authors: Yushu Wu Yifan Gong Pu Zhao Yanyu Li Zheng Zhan

Tag: neural architecture search power consumption application scenario real-time image quality deep learning neural architecture

2207.12600 Learning Protein Representations via Complete 3D Graph Networks

Arxiv Link: http://arxiv.org/abs/2207.12600

Github Link: https://github.com/divelab/DIG

Authors: Limei Wang Haoran Liu Yi Liu Jerry Kurtin Shuiwang Ji

Tag: downstream task representation learning

2207.12620 Large-displacement 3D Object Tracking with Hybrid Non-local Optimization

Arxiv Link: http://arxiv.org/abs/2207.12620

Github Link: https://github.com/cvbubbles/nonlocal-3dtracking

Authors: Xuhui Tian Xinran Lin Fan Zhong Xueying Qin

Tag: real-time source code

2207.12628 Bundle MCR: Towards Conversational Bundle Recommendation

Arxiv Link: http://arxiv.org/abs/2207.12628

Github Link: https://github.com/aaronheee/bundle-mcr

Authors: Zhankui He Handong Zhao Tong Yu Sungchul Kim Fan Du

Tag: user preference markov decision process user interaction recommender system

2207.12646 Learning Hierarchy Aware Features for Reducing Mistake Severity

Arxiv Link: http://arxiv.org/abs/2207.12646

Github Link: https://github.com/07agarg/haf

Authors: Ashima Garg Depanshu Sani Saket Anand

Tag: source code

2207.12660 Bilateral Self-unbiased Learning from Biased Implicit Feedback

Arxiv Link: http://arxiv.org/abs/2207.12660

Github Link: https://github.com/jaewoong-lee/sigir_2022_biser

Authors: Jae-woong Lee Seongmin Park Joonseok Lee Jongwuk Lee

Tag: recommender system

2207.12750 SPAIC: A Spike-based Artificial Intelligence Computing Framework

Arxiv Link: http://arxiv.org/abs/2207.12750

Github Link: https://github.com/ZhejianglabNCRC/SPAIC

Authors: Chaofei Hong Mengwen Yuan Mengxiao Zhang Xiao Wang Chegnjun Zhang

Tag: artificial intelligence deep learning neural network

2207.12762 Productivity meets Performance: Julia on A64FX

Arxiv Link: http://arxiv.org/abs/2207.12762

Github Link: https://github.com/giordano/julia-on-fugaku

Authors: Mosè Giordano Milan Klöwer Valentin Churavy

Tag: programming language

2207.12767 Criteria Comparative Learning for Real-scene Image Super-Resolution

Arxiv Link: http://arxiv.org/abs/2207.12767

Github Link: https://github.com/house-leo/realsr-zero

Authors: Yukai Shi Hao Li Sen Zhang Zhijing Yang Xiao Wang

Tag: low-resolution image computer vision image patch vision task contrastive loss contrastive learning

2207.12819 S-Prompts Learning with Pre-trained Transformers: An Occam's Razor for Domain Incremental Learning

Arxiv Link: http://arxiv.org/abs/2207.12819

Github Link: https://github.com/google-research/l2p

Authors: Yabin Wang Zhiwu Huang Xiaopeng Hong

Tag: deep neural network continual learning neural network

2207.12831 Lifelong DP: Consistently Bounded Differential Privacy in Lifelong Machine Learning

Arxiv Link: http://arxiv.org/abs/2207.12831

Github Link: https://github.com/haiphanNJIT/PrivateDeepLearning

Authors: Phung Lai Han Hu NhatHai Phan Ruoming Jin My T. Thai

Tag: differential privacy

2207.12850 Towards Smart City Security: Violence and Weaponized Violence Detection using DCNN

Arxiv Link: http://arxiv.org/abs/2207.12850

Github Link: https://github.com/Ti-Oluwanimi/Violence_Detection

Authors: Toluwani Aremu Li Zhiyuan Reem Alameeri Moayad Aloqaily Mohsen Guizani

Tag: real-time convolutional neural network video frame neural network

2207.12859 Visually explaining 3D-CNN predictions for video classification with an adaptive occlusion sensitivity analysis

Arxiv Link: http://arxiv.org/abs/2207.12859

Github Link: https://github.com/uchiyama33/aosa

Authors: Tomoki Uchiyama Naoya Sogi Koichiro Niinuma Kazuhiro Fukui

Tag: convolutional neural network neural network decision-making process sensitivity analysis image classification video data

2207.12876 Repeated Environment Inference for Invariant Learning

Arxiv Link: http://arxiv.org/abs/2207.12876

Github Link: https://github.com/aamixsh/reiil

Authors: Aayush Mishra Anqi Liu

Tag:

2207.12877 Representing Random Utility Choice Models with Neural Networks

Arxiv Link: http://arxiv.org/abs/2207.12877

Github Link: https://github.com/antoinedesir/rumnet

Authors: Ali Aouad Antoine Désir

Tag: utility function deep learning neural network

2207.12883 Enhancing Collaborative Filtering Recommender with Prompt-Based Sentiment Analysis

Arxiv Link: http://arxiv.org/abs/2207.12883

Github Link: https://github.com/hhhhzy/nlu_project

Authors: Elliot Dang Zheyuan Hu Tong Li

Tag: sentiment analysis nlp model prompt-based learning

2207.12899 Development of a cost-effective headphone calibration procedure for soundscape evaluation

Arxiv Link: http://arxiv.org/abs/2207.12899

Github Link: https://github.com/ntudsp/ica22-calibration

Authors: Bhan Lam Kenneth Ooi Zhen-Ting Ong Karn N. Watcharasupat Trevor Wong

Tag:

2207.12901 Cross-Modality Image Registration using a Training-Time Privileged Third Modality

Arxiv Link: http://arxiv.org/abs/2207.12901

Github Link: https://github.com/qianyeyang/mpmrireg

Authors: Qianye Yang David Atkinson Yunguan Fu Tom Syer Wen Yan

Tag: learning-based method

2207.12906 Searching on the boundary of abundance for odd weird numbers

Arxiv Link: http://arxiv.org/abs/2207.12906

Github Link: https://github.com/fwjmath/ows-data

Authors: Wenjie Fang

Tag:

2207.12934 A Reliable Online Method for Joint Estimation of Focal Length and Camera Rotation

Arxiv Link: http://arxiv.org/abs/2207.12934

Github Link: https://github.com/elderlab-york-university/onlinefr

Authors: Yiming Qian James H. Elder

Tag: camera pose

2207.12980 Efficient One Pass Self-distillation with Zipf's Label Smoothing

Arxiv Link: http://arxiv.org/abs/2207.12980

Github Link: https://github.com/megvii-research/zipfls

Authors: Jiajun Liang Linze Li Zhaodong Bing Borui Zhao Yao Tang

Tag:

2207.12994 V²L: Leveraging Vision and Vision-language Models into Large-scale Product Retrieval

Arxiv Link: http://arxiv.org/abs/2207.12994

Github Link: https://github.com/wangwenhao0716/v2l

Authors: Wenhao Wang Yifan Sun Zongxin Yang Yi Yang

Tag: language model vision model

2207.13005 Hansel: A Chinese Few-Shot and Zero-Shot Entity Linking Benchmark

Arxiv Link: http://arxiv.org/abs/2207.13005

Github Link: https://github.com/imryanxu/hansel

Authors: Zhenran Xu Zifei Shan Yuxin Li Baotian Hu Bing Qin

Tag: knowledge base

2207.13038 Text-Guided Synthesis of Artistic Images with Retrieval-Augmented Diffusion Models

Arxiv Link: http://arxiv.org/abs/2207.13038

Github Link: https://github.com/compvis/latent-diffusion

Authors: Robin Rombach Andreas Blattmann Björn Ommer

Tag: source code synthesized image diffusion model

2207.13048 Domain Adaptation under Open Set Label Shift

Arxiv Link: http://arxiv.org/abs/2207.13048

Github Link: https://github.com/acmi-lab/open-set-label-shift

Authors: Saurabh Garg Sivaraman Balakrishnan Zachary C. Lipton

Tag: domain adaptation linear model

2207.13061 NewsStories: Illustrating articles with visual summaries

Arxiv Link: http://arxiv.org/abs/2207.13061

Github Link: https://github.com/newsstoriesdata/newsstories.github.io

Authors: Reuben Tan Bryan A. Plummer Kate Saenko JP Lewis Avneesh Sud

Tag: news article

2207.12194 Domain Decorrelation with Potential Energy Ranking

Arxiv Link: http://arxiv.org/abs/2207.12194

Github Link: https://github.com/foreverps/poer

Authors: Sen Pei Jiaxi Sun Shiming Xiang Gaofeng Meng

Tag: computer vision vision task neural network domain shift domain generalization deep learning

2207.12377 Confident Deep Learning loss function for one-step Conformal Prediction approximation

Arxiv Link: http://arxiv.org/abs/2207.12377

Github Link: https://github.com/juliameister/dl-confident-loss-function

Authors: Julia A. Meister Khuong An Nguyen Stelios Kapetanakis Zhiyuan Luo

Tag: large-scale dataset mnist dataset deep learning

2207.13091 VDL-Surrogate: A View-Dependent Latent-based Model for Parameter Space Exploration of Ensemble Simulation

Arxiv Link: http://arxiv.org/abs/2207.13091

Github Link: https://github.com/trainsn/vdl-surrogate

Authors: Neng Shi Jiayi Xu Hanqi Guo Jonathan Woodring Han-Wei Shen

Tag: source code latent representation surrogate model

2207.13129 LGV: Boosting Adversarial Example Transferability from Large Geometric Vicinity

Arxiv Link: http://arxiv.org/abs/2207.13129

Github Link: https://github.com/framartin/lgv-geometric-transferability

Authors: Martin Gubri Maxime Cordy Mike Papadakis Yves Le Traon Koushik Sen

Tag: adversarial attack adversarial example surrogate model

2207.13159 TINYCD: A (Not So) Deep Learning Model For Change Detection

Arxiv Link: http://arxiv.org/abs/2207.13159

Github Link: https://github.com/andreacodegoni/tiny_model_4_cd

Authors: Andrea Codegoni Gabriele Lombardi Alessandro Ferrari

Tag: source code lighting condition real-time deep learning time domain spatial feature

2207.13176 Exploring the Unprecedented Privacy Risks of the Metaverse

Arxiv Link: http://arxiv.org/abs/2207.13176

Github Link: https://github.com/metaguard/metadata

Authors: Vivek Nair Gonzalo Munilla Garrido Dawn Song

Tag:

2207.13179 Unsupervised Learning under Latent Label Shift

Arxiv Link: http://arxiv.org/abs/2207.13179

Github Link: https://github.com/manleyroberts/lls-ddfa

Authors: Manley Roberts Pranav Mani Saurabh Garg Zachary C. Lipton

Tag: matrix factorization unsupervised learning distribution shift

2207.13227 Atomic structure generation from reconstructing structural fingerprints

Arxiv Link: http://arxiv.org/abs/2207.13227

Github Link: https://github.com/fung-lab/structrepgen

Authors: Victor Fung Shuyi Jia Jiaxin Zhang Sirui Bi Junqi Yin

Tag: generative model variational autoencoder

2207.13235 Mid-level Representation Enhancement and Graph Embedded Uncertainty Suppressing for Facial Expression Recognition

Arxiv Link: http://arxiv.org/abs/2207.13235

Github Link: https://github.com/cruiseyugh/gus

Authors: Jie Lei Zhao Liu Zeyu Zou Tong Li Xu Juan

Tag: facial expression representation learning

2207.13247 Concurrent Subsidiary Supervision for Unsupervised Source-Free Domain Adaptation

Arxiv Link: http://arxiv.org/abs/2207.13247

Github Link: https://github.com/val-iisc/stickerda

Authors: Jogendra Nath Kundu, Suvaansh Bhambri, Akshay Kulkarni, Hiran Sarkar, Varun Jampani

Tag: domain shift, domain adaptation, pretext task, unsupervised domain adaptation

2207.13254 Contextual Information and Commonsense Based Prompt for Emotion Recognition in Conversation

Arxiv Link: http://arxiv.org/abs/2207.13254

Github Link: https://github.com/deqingyang/cisper

Authors: Jingjie Yi, Deqing Yang, Siyu Yuan, Caiyan Cao, Zhiyao Zhang

Tag: emotion recognition, language model, contextual information

2207.13307 Marker and source-marker reprogramming of Most Permissive Boolean networks and ensembles with BoNesis

Arxiv Link: http://arxiv.org/abs/2207.13307

Github Link: https://github.com/bnediction/reprogramming-with-bonesis

Authors: Loïc Paulevé

Tag: fixed point, dynamical system

2207.13320 Generator Knows What Discriminator Should Learn in Unconditional GAN

Arxiv Link: http://arxiv.org/abs/2207.13320

Github Link: https://github.com/naver-ai/ggdr

Authors: Gayoung Lee, Hyunsu Kim, Junho Kim, Seonghyeon Kim, Jung-Woo Ha

Tag: u-net, feature map, semantic representation

2207.13325 SiRi: A Simple Selective Retraining Mechanism for Transformer-based Visual Grounding

Arxiv Link: http://arxiv.org/abs/2207.13325

Github Link: https://github.com/qumengxue/siri-vg

Authors: Mengxue Qu, Yu Wu, Wu Liu, Qiqi Gong, Xiaodan Liang

Tag:

2207.13353 One-Trimap Video Matting

Arxiv Link: http://arxiv.org/abs/2207.13353

Github Link: https://github.com/hongje/otvm

Authors: Hongje Seong, Seoung Wug Oh, Brian Price, Euntai Kim, Joon-Young Lee

Tag: source code, information flow

2207.13374 Efficient Video Deblurring Guided by Motion Magnitude

Arxiv Link: http://arxiv.org/abs/2207.13374

Github Link: https://github.com/sollynoay/mmp-rnn

Authors: Yusheng Wang, Yunfan Lu, Ye Gao, Lin Wang, Zhihang Zhong

Tag: motion blur, recurrent neural network, neural network

2207.13378 Identifying Hard Noise in Long-Tailed Sample Distribution

Arxiv Link: http://arxiv.org/abs/2207.13378

Github Link: https://github.com/yxymessi/h2e-framework

Authors: Xuanyu Yi, Kaihua Tang, Xian-Sheng Hua, Joo-Hwee Lim, Hanwang Zhang

Tag: training distribution, learning framework

2207.13390 Evolutionary Multiparty Distance Minimization

Arxiv Link: http://arxiv.org/abs/2207.13390

Github Link: https://github.com/milabhitsz/2022sheoptmpnds3

Authors: Zeneng She, Wenjian Luo, Xin Lin, Yatong Chang, Yuhui Shi

Tag: minimization problem

2207.13417 Hardly Perceptible Trojan Attack against Neural Networks with Bit Flips

Arxiv Link: http://arxiv.org/abs/2207.13417

Github Link: https://github.com/jiawangbai/hpt

Authors: Jiawang Bai, Kuofeng Gao, Dihong Gong, Shu-Tao Xia, Zhifeng Li

Tag: deep neural network, additive noise, optimization algorithm, neural network, imagenet dataset

2207.13424 Efficient Pix2Vox++ for 3D Cardiac Reconstruction from 2D echo views

Arxiv Link: http://arxiv.org/abs/2207.13424

Github Link: https://github.com/david-stojanovski/e-pix2vox-reconstruction

Authors: David Stojanovski, Uxio Hermida, Marica Muffoletto, Pablo Lamata, Arian Beqiri

Tag:

2207.13428 Leveraging GAN Priors for Few-Shot Part Segmentation

Arxiv Link: http://arxiv.org/abs/2207.13428

Github Link: https://github.com/hanmengya1996/pftgan

Authors: Mengya Han, Heliang Zheng, Chaoyue Wang, Yong Luo, Han Hu

Tag: downstream task, auto-encoder

2207.13475 PASTA-GAN++: A Versatile Framework for High-Resolution Unpaired Virtual Try-on

Arxiv Link: http://arxiv.org/abs/2207.13475

Github Link: https://github.com/xiezhy6/pasta-gan-plusplus

Authors: Zhenyu Xie, Zaiyu Huang, Fuwei Zhao, Haoye Dong, Michael Kampffmeyer

Tag:

2207.13479 AutoTransition: Learning to Recommend Video Transition Effects

Arxiv Link: http://arxiv.org/abs/2207.13479

Github Link: https://github.com/acherstyx/autotransition

Authors: Yaojie Shen, Libo Zhang, Kai Xu, Xiaojie Jin

Tag: user study

2207.13513 Learning with Combinatorial Optimization Layers: a Probabilistic Approach

Arxiv Link: http://arxiv.org/abs/2207.13513

Github Link: https://github.com/axelparmentier/inferopt.jl

Authors: Guillaume Dalle, Léo Baty, Louis Bouvier, Axel Parmentier

Tag: stochastic gradient, stochastic gradient descent, gradient descent, optimization algorithm

2207.13543 Abstracting Sketches through Simple Primitives

Arxiv Link: http://arxiv.org/abs/2207.13543

Github Link: https://github.com/explainableml/sketch-primitives

Authors: Stephan Alaniz, Massimiliano Mancini, Anjan Dutta, Diego Marcos, Zeynep Akata

Tag: image retrieval

2207.13583 Towards the Neuroevolution of Low-level Artificial General Intelligence

Arxiv Link: http://arxiv.org/abs/2207.13583

Github Link: https://github.com/socratesnfr/neat-nagi-python

Authors: Sidney Pontes-Filho, Kristoffer Olsen, Anis Yazidi, Michael A. Riegler, Pål Halvorsen

Tag: curriculum learning, network topology, artificial neural network, neural network

2207.13674 Fast expansion into harmonics on the disk: a steerable basis with fast radial convolutions

Arxiv Link: http://arxiv.org/abs/2207.13674

Github Link: https://github.com/nmarshallf/fle_2d

Authors: Nicholas F. Marshall, Oscar Mickelin, Amit Singer

Tag:

2207.13676 Open Source Vizier: Distributed Infrastructure and API for Reliable and Flexible Blackbox Optimization

Arxiv Link: http://arxiv.org/abs/2207.13676

Github Link: https://github.com/google/vizier

Authors: Xingyou Song, Sagi Perel, Chansoo Lee, Greg Kochanski, Daniel Golovin

Tag: transfer learning, distributed system

2207.13686 Shift-tolerant Perceptual Similarity Metric

Arxiv Link: http://arxiv.org/abs/2207.13686

Github Link: https://github.com/abhijay9/shifttolerant-lpips

Authors: Abhijay Ghildyal, Feng Liu

Tag: deep neural network, reference image, neural network

2207.13702 Physical Systems Modeled Without Physical Laws

Arxiv Link: http://arxiv.org/abs/2207.13702

Github Link: https://github.com/samchyams/physicsmodelingml

Authors: David Noever, Samuel Hyams

Tag:

2207.13738 Break and Make: Interactive Structural Understanding Using LEGO Bricks

Arxiv Link: http://arxiv.org/abs/2207.13738

Github Link: https://github.com/aaronwalsman/ltron

Authors: Aaron Walsman, Muru Zhang, Klemen Kotar, Karthik Desingh, Ali Farhadi

Tag: geometric structure

2207.13751 GAUDI: A Neural Architect for Immersive 3D Scene Generation

Arxiv Link: http://arxiv.org/abs/2207.13751

Github Link: https://github.com/apple/ml-gaudi

Authors: Miguel Angel Bautista, Pengsheng Guo, Samira Abnar, Walter Talbott, Alexander Toshev

Tag: camera pose, generative model, latent representation

2207.13820 Cross-Attention of Disentangled Modalities for 3D Human Mesh Recovery with Transformers

Arxiv Link: http://arxiv.org/abs/2207.13820

Github Link: https://github.com/postech-ami/fastmetro

Authors: Junhyeong Cho, Kim Youwang, Tae-Hyun Oh

Tag: transformer encoder, encoder-decoder architecture

2207.13827 Declarative Smart Contracts

Arxiv Link: http://arxiv.org/abs/2207.13827

Github Link: https://github.com/haoxianchen/declarative-smart-contracts

Authors: Haoxian Chen Gerald Whitters