This is fourth episode of series: TSP From DP to Deep Learning. In this episode, we systematically compare different searching algorithms for finding most likely sequence in the context of simplied markov chain setting. These models can be further utilized in deep learning decoding stage, which will be illustrated in reinforcement learning, in the next episode. Full list of this series is listed below.

Episode 1: AC TSP on AIZU with recursive DP

Episode 2: TSP DP on a Euclidean Dataset

Episode 3: Pointer Networks in PyTorch

Episode 4: Search for Most Likely Sequence

Problem as Markov Chain

In sequence-to-sequence problem, we are always faced with same problem of determining the best or most likely sequence of output. This kind of recurring problem exists extensively in algorithms, machine learning where we are given initial states and the dynamics of the system, and the goal is to find a path that is most likely. The corresponding concept, in science or mathematical discipline, is called Markov Chain.

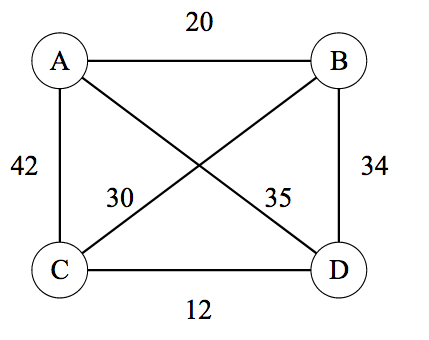

Let describe the problem in the context of markov chain. Suppose there are \(n\) states, and initial state is given by $s_0 = [0.35, 0.25, 0.4] $. The transition matrix is defined by \(T\) where $ T[i][j]$ denotes the probability of transitioning from \(i\) to \(j\). Notice that each row sums to \(1.0\). \[ T= \begin{matrix} & \begin{matrix}0&1&2\end{matrix} \\\\ \begin{matrix}0\\\\1\\\\2\end{matrix} & \begin{bmatrix}0.3&0.6&0.1\\\\0.4&0.2&0.4\\\\0.3&0.3&0.4\end{bmatrix}\\\\ \end{matrix} \]

Probability of the next state \(s_1\) is derived by multiplication of \(s_0\) and \(T\), which can be visually interpreted by animation below.

The actual probability distribution value of \(s_1\) is computed numerically below. Recall that left multiplying a row with a matrix amounts to making a linear combination of that row vector.

\[ s_1 = \begin{bmatrix}0.35& 0.25& 0.4\end{bmatrix} \begin{matrix} \begin{bmatrix}0.3&0.6&0.1\\\\0.4&0.2&0.4\\\\0.3&0.3&0.4\end{bmatrix}\\\\ \end{matrix} = \begin{bmatrix}0.325& 0.35& 0.255\end{bmatrix} \] Again, state \(s_2\) can be derived in the same way \(s_1 \times T\), where we assume the transitioning dynamics remains the same. However, in deep learning problem, the dynamics usually depends on \(s_i\), or vary according to the stage.

Suppose there are only 3 stages in our problem, e.g., \(s_0 \rightarrow s_1 \rightarrow s_2\). Let \(L\) be the number of stages and \(N\) be the number of vertices in each stage. Hence \(L=N=3\) in our problem setting. There could be \(N^L\) different paths starting from initial stage and to final stage.

Let us compute an arbitrary path probability as an example, \(2(s_0) \rightarrow 1(s_1) \rightarrow 2(s_2)\). The total probability is

\[ p(2 \rightarrow 1 \rightarrow 2) = s_0[2] \times T[2][1] \times T[1][2] = 0.4 \times 0.3 \times 0.4 = 0.048 \]

Exhaustive Search

First, we implement \(N^L\) exhaustive or brute force search.

The following Python 3 function returns one most likely sequence and its probability. Running the algorithm with our example produces 0.084 and route \(0 \rightarrow 1 \rightarrow 2\).

1 | def search_brute_force(initial: List, transition: List, L: int) -> Tuple[float, Tuple]: |

Greedy Search

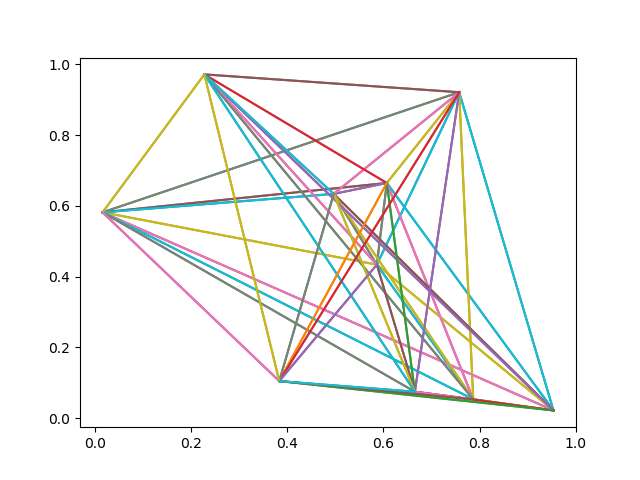

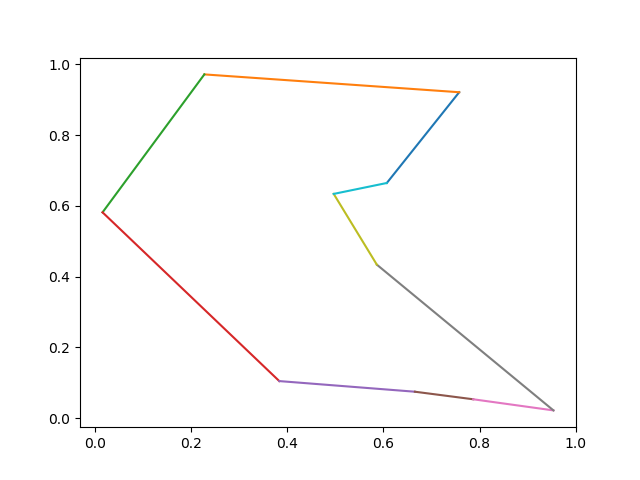

Exhaustive search always generates most likely sequence, as searching for a needle in the hay at the cost of exponential runtime complexity \(O(N^L)\). The simplest strategy, unknown as greedy, identifies one vertex in each stage and then expand the vertex in next stage. This strategy, of course, is not guaranteed to find most likely sequence but is fast. See animation below.

Code in Python 3 is given below. Numpy package is employed to utilize np.argmax() for code clarity. Notice there are 2 for loops (the other is np.argmax) so the runtime complexity is \(O(N\times L)\).

1 | def search_greedy(initial: List, transition: List, L: int) -> Tuple[float, Tuple]: |

Beam Search

We could improve greedy strategy a little bit by expanding more vertices in each stage. In beam search with \(k\) nodes, the strategy is in each stage, identify \(k\) nodes with highest probability and expand these \(k\) nodes into next stage. In our example, \(k=2\), we select first 2 nodes in stage \(s_0\), expand these 2 nodes and evaluate \(2 \times 3\) nodes in stage \(s_1\), then select 2 nodes and evaluate 6 nodes in stage \(s_2\). Beam search, similar to greedy strategy, is not guaranteed to find most likely sequence but it extends search space with linear complexity.

Below is implementation in Python 3 with PriorityQueue to select top \(k\) nodes. Notice in order to use reverse order of PriorityQueue, a class with @total_ordering is required to be defined. The runtime complexity is \(O(k\times N \times L)\) .

1 | def search_beam(initial: List, transition: List, L: int, K: int) -> Tuple[float, Tuple]: |

Viterbi DP

Similarly to TSP DP version, there is a dynamic programming approach, widely known as Viterbi algorithm, that always finds out sequence with max probability while reducing runtime complexity from \(O(N^L)\) to \(O(L \times N \times N)\) (corresponding to 3 loops in code below). The core idea is in each stage, an array keeps most likely sequence ending with each vertex and use the dp array as input to next stage. For example, let \(dp[1][0]\) be the most likely probability in \(s_1\) stage and end with vertex 0. \[ dp[1][0] = \max \\{s_0[0] \rightarrow s_1[0], s_0[1] \rightarrow s_1[0], s_0[2] \rightarrow s_1[0]\\} \]

Illustrative code that returns max probability but not route, in order to emphasize 3 loop pattern and max operation, honoring the essence of the algorithm.

1 | def search_dp(initial: List, transition: List, L: int) -> float: |

Probabilistic Sampling

All algorithms described above are deterministic. However, in NLP deep learning decoding, deterministic property has disadvantage in that it may get trapped into repeated phrases or sentences. For example, paragraph like below is commonly generated:

1 | This is the best of best of best of ... |

For demonstration purpose, here is the code based on greedy strategy which probabilistically determines one node at each stage.

1 | def search_prob_greedy(initial: List, transition: List, L: int) -> Tuple[float, Tuple]: |