This series deals with algorithms for zero-sum, turn-based board games, a subtype of combinatorial games. We start with problems that have a small search space, introduce the classic algorithms and the corresponding combinatorial game theory, and end with modern approximate deep RL techniques. With those stepping stones laid, we will be able to understand and appreciate how AlphaGo works. In this first episode, we present three classic game problems from LeetCode, solve them first by brute force and then with DP, and finally rewrite them using the classic game algorithms minimax and alpha-beta pruning.

Episode 2: Tic-Tac-Toe Problems in Leetcode and Solve the Game using Minimax

Episode 3: Connect-N (Tic-Tac-Toe, Gomoku) OpenAI Gym GUI Environment

Episode 4: Connect-N (Tic-Tac-Toe, Gomoku) AlphaGo Zero Self-Play MCTS Reinforcement Learning

Leetcode 292 Nim Game (Easy)

Let's start with an easy Leetcode gaming problem, Leetcode 292 Nim Game.

You are playing the following Nim Game with your friend: There is a heap of stones on the table, each time one of you take turns to remove 1 to 3 stones. The one who removes the last stone will be the winner. You will take the first turn to remove the stones.

Both of you are very clever and have optimal strategies for the game. Write a function to determine whether you can win the game given the number of stones in the heap.

Example:

Input: 4

Output: false

Explanation: If there are 4 stones in the heap, then you will never win the game;

No matter 1, 2, or 3 stones you remove, the last stone will always be removed by your friend.

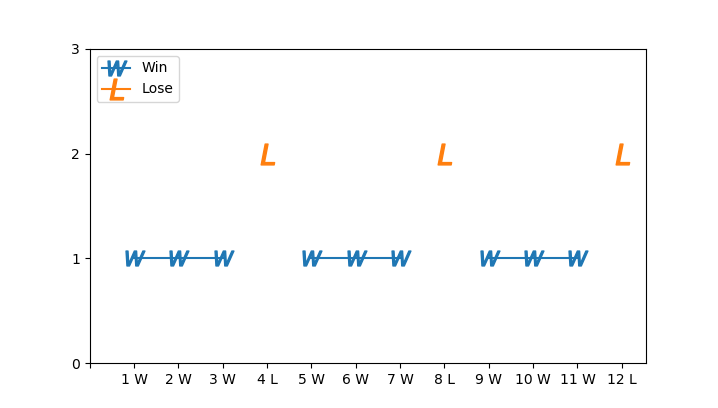

Let \(f(n)\) be the result, either Win or Lose, when it is your turn to move with \(n\) stones left and you play optimally. The first nontrivial case is \(f(4)\). Playing optimally means that if any move leads to Win, you will choose it. So you try removing 1, 2, or 3 stones and check whether your opponent can still win. Obviously, \(f(1) = f(2) = f(3) = Win\), so whatever you remove from 4 stones, your opponent wins. Therefore, \(f(4)\) is guaranteed to be Lose. In general, reading Win as true, the recurrence relation is \[ f(n) = \neg (f(n-1) \land f(n-2) \land f(n-3)) \]

This translates directly into the following Python 3 code.

1 | # TLE |
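A minimal sketch of the recurrence, assuming \(n \ge 1\); the function names `can_win_nim_brute` and `can_win_nim_memo` are illustrative and not taken from the original listing. The first version is the plain recursion, the second adds `functools.lru_cache`.

```python
from functools import lru_cache


def can_win_nim_brute(n: int) -> bool:
    """Plain recursion on f(n); exponential time, usable only for small n."""
    if n <= 3:
        return True  # with 1..3 stones left, take them all and win
    # You win iff at least one move leaves the opponent in a losing position.
    return not (can_win_nim_brute(n - 1)
                and can_win_nim_brute(n - 2)
                and can_win_nim_brute(n - 3))


@lru_cache(maxsize=None)
def can_win_nim_memo(n: int) -> bool:
    """Same recurrence with memoization; linear time, but recursion depth grows with n."""
    if n <= 3:
        return True
    return not (can_win_nim_memo(n - 1)
                and can_win_nim_memo(n - 2)
                and can_win_nim_memo(n - 3))
```

The memoized version removes the repeated work, but each call still recurses about \(n\) levels deep, so it is only practical for small \(n\).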

1 | # RecursionError: maximum recursion depth exceeded in comparison n=1348820612 |

However, for this problem lru_cache alone is not enough to get accepted (AC): for a large n such as 1348820612, the recursive implementation exceeds the maximum recursion depth. We can, of course, rewrite it as an iterative forward loop, but that still results in TLE.

1 | # TLE for 1348820612 |
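One way the iterative forward loop could look, as a sketch that keeps only the last three values of \(f\) (the name `can_win_nim_iter` is an assumption). It avoids deep recursion but still performs \(O(n)\) iterations.

```python
def can_win_nim_iter(n: int) -> bool:
    """Forward loop over f(4), f(5), ..., f(n) using a window of three values."""
    if n <= 3:
        return True
    # a, b, c hold f(k-3), f(k-2), f(k-1) for the next k to compute.
    a, b, c = True, True, True
    for _ in range(4, n + 1):
        a, b, c = b, c, not (a and b and c)
    return c
```

Printing `[can_win_nim_iter(n) for n in range(1, 13)]` makes the repeating Win, Win, Win, Lose pattern visible.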

So an accepted solution needs sublinear complexity. The iterative version also hints that the win/lose pattern has period 4. Indeed, if you list the values of \(f(n)\) in order, it becomes clear that every \(n\) with \(n \bmod 4 = 0\) leads to Lose and every other \(n\) leads to Win. Why? Suppose you start with \(4k+i\) stones \((i=1,2,3)\). You can always remove \(i\) stones and leave \(4k\) stones to your opponent. Whatever he removes, you are handed back \(4k_1 + i_1\) stones \((i_1 = 1,2,3)\) with \(k_1 = k - 1\). This pattern repeats until you are left with 1, 2, or 3 stones, which you take to win.

Below is the one-line AC version.

1 | # AC |
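The period-4 argument reduces the solution to a single modulo test. A sketch, with `can_win_nim` as an assumed function name:

```python
def can_win_nim(n: int) -> bool:
    """You lose exactly when the heap size is a multiple of 4."""
    return n % 4 != 0
```

For the large input mentioned earlier, 1348820612 is divisible by 4, so the first player loses, and the check runs in constant time.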

Leetcode 486 Predict the Winner (Medium)

Let's try a harder problem, Leetcode 486 Predict the Winner.

Given an array of scores that are non-negative integers. Player 1 picks one of the numbers from either end of the array followed by the player 2 and then player 1 and so on. Each time a player picks a number, that number will not be available for the next player. This continues until all the scores have been chosen. The player with the maximum score wins.

Given an array of scores, predict whether player 1 is the winner. You can assume each player plays to maximize his score.

Example 1:

Input: [1, 5, 2]

Output: False

Explanation: Initially, player 1 can choose between 1 and 2.

If he chooses 2 (or 1), then player 2 can choose from 1 (or 2) and 5. If player 2 chooses 5, then player 1 will be left with 1 (or 2).

So, final score of player 1 is 1 + 2 = 3, and player 2 is 5.

Hence, player 1 will never be the winner and you need to return False.

Example 2:

Input: [1, 5, 233, 7]

Output: True

Explanation: Player 1 first chooses 1. Then player 2 have to choose between 5 and 7. No matter which number player 2 choose, player 1 can choose 233.

Finally, player 1 has more score (234) than player 2 (12), so you need to return True representing player1 can win.

A player can take either the leftmost or the rightmost number and then leaves the remaining array to his opponent. Define maxDiff(l, r) as the maximum score difference (own score minus the opponent's score) that the current player can achieve when facing the subarray \([l, r]\).

\[ \operatorname{maxDiff}(l, r) = \max \begin{cases} nums[l] - \operatorname{maxDiff}(l + 1, r) \\ nums[r] - \operatorname{maxDiff}(l, r - 1) \end{cases} \]

Let \(f(n)\) be the running time on an array of length \(n\). Each call spawns two calls on arrays of length \(n-1\), which gives the following recurrence. \[ f(n) = 2f(n-1) + O(1) = O(2^n) \]

Surprisingly, this time the brute-force version passes, but only just (6300 ms).

1 | # AC |
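A brute-force sketch of the maxDiff recurrence. The names `predict_the_winner_brute` and `max_diff` are illustrative, the array is assumed to be non-empty, and a tie is counted as a win for player 1, following the LeetCode statement.

```python
from typing import List


def predict_the_winner_brute(nums: List[int]) -> bool:
    """Exponential-time recursion over subarrays [l, r]."""

    def max_diff(l: int, r: int) -> int:
        # Base case: one number left, the current player simply takes it.
        if l == r:
            return nums[l]
        # Take one end; the opponent's best difference on the rest is subtracted.
        return max(nums[l] - max_diff(l + 1, r),
                   nums[r] - max_diff(l, r - 1))

    return max_diff(0, len(nums) - 1) >= 0
```

On `[1, 5, 2]` the best difference for player 1 is -2, so the function returns `False`, matching Example 1.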

Again, note that the same node is computed repeatedly: for example, the [1-2] node is fully expanded a second time when going from the root to the right child. Applying the same lru_cache trick, a one-line decorator on maxDiff, we pass again, now with runtime complexity \(O(n^2)\) and a running time of 43 ms: a trivial change but a substantial improvement!

1 | # AC |
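The memoized variant differs from the brute-force recursion only by the decorator. This is a sketch with assumed names; since there are at most \(n(n+1)/2\) distinct pairs \((l, r)\), each computed once, the running time is \(O(n^2)\).

```python
from functools import lru_cache
from typing import List


def predict_the_winner_memo(nums: List[int]) -> bool:
    """Top-down DP: the maxDiff recursion with cached (l, r) states."""

    @lru_cache(maxsize=None)
    def max_diff(l: int, r: int) -> int:
        if l == r:
            return nums[l]
        return max(nums[l] - max_diff(l + 1, r),
                   nums[r] - max_diff(l, r - 1))

    return max_diff(0, len(nums) - 1) >= 0
```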

Leetcode 464 Can I Win (Medium)

A similar but slightly more difficult problem is Leetcode 464 Can I Win, which is solved with DP over a bitmask.

In the "100 game," two players take turns adding, to a running total, any integer from 1..10. The player who first causes the running total to reach or exceed 100 wins.

What if we change the game so that players cannot re-use integers?

For example, two players might take turns drawing from a common pool of numbers of 1..15 without replacement until they reach a total >= 100.

Given an integer maxChoosableInteger and another integer desiredTotal, determine if the first player to move can force a win, assuming both players play optimally.

You can always assume that maxChoosableInteger will not be larger than 20 and desiredTotal will not be larger than 300.

Example

Input:

maxChoosableInteger = 10

desiredTotal = 11

Output:

false

Explanation:

No matter which integer the first player choose, the first player will lose.

The first player can choose an integer from 1 up to 10.

If the first player choose 1, the second player can only choose integers from 2 up to 10.

The second player will win by choosing 10 and get a total = 11, which is >= desiredTotal.

Same with other integers chosen by the first player, the second player will always win.

1 | # AC |
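A sketch of the bitmask DP, under the assumption that bit \(i-1\) of `used` marks integer \(i\) as taken. The names `can_i_win` and `wins` are illustrative. The remaining total is fully determined by `used`, so the cache holds at most \(2^m\) states.

```python
from functools import lru_cache


def can_i_win(max_choosable_integer: int, desired_total: int) -> bool:
    m = max_choosable_integer
    if desired_total <= 0:
        return True  # the target is already reached before any move
    if m * (m + 1) // 2 < desired_total:
        return False  # even all numbers together cannot reach the target

    @lru_cache(maxsize=None)
    def wins(used: int, remaining: int) -> bool:
        """True if the player to move can force a win from this state."""
        for i in range(1, m + 1):
            bit = 1 << (i - 1)
            if used & bit:
                continue  # integer i was already drawn
            # Win immediately, or move to a state where the opponent loses.
            if i >= remaining or not wins(used | bit, remaining - i):
                return True
        return False

    return wins(0, desired_total)
```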

There are \(2^m\) states, and for each state we probe at most \(m\) options, so the overall runtime complexity is \(O(m 2^m)\), where \(m\) is maxChoosableInteger.

Minimax Algorithm

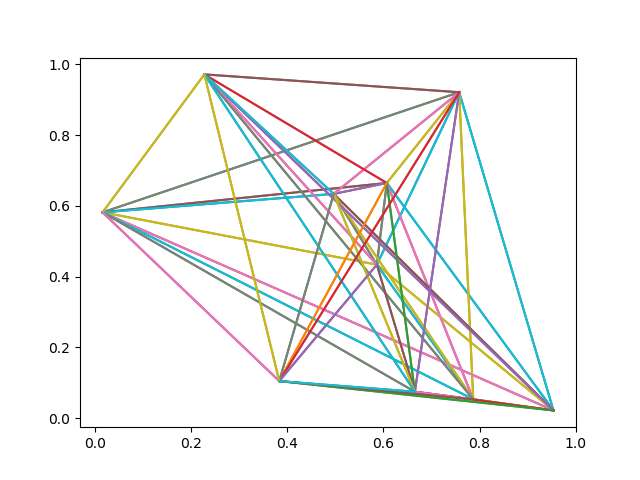

So far we have seen several zero-sum, turn-based games on LeetCode. In fact, there is a more general algorithm for this type of game: the minimax algorithm with alternating moves. In the general setting, two players move in turn; the first player tries to maximize the game value and the second player tries to minimize it. For example, the accompanying graph shows all nodes, each labelled with its value. Computing from the bottom up, the first player (max) can obtain the optimal value -7, assuming both players play optimally.

Pseudo code in Python 3 is listed below.

1 | def minimax(node: Node, depth: int, maximizingPlayer: bool) -> int: |
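A runnable sketch of generic minimax with the signature shown above. The `Node` class here is a hypothetical minimal tree node (a value plus a list of children) introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    # Hypothetical node type: `value` is only meaningful where the search stops.
    value: int = 0
    children: List["Node"] = field(default_factory=list)


def minimax(node: Node, depth: int, maximizingPlayer: bool) -> int:
    # Stop at the depth limit or at a terminal node and report its value.
    if depth == 0 or not node.children:
        return node.value
    if maximizingPlayer:
        return max(minimax(c, depth - 1, False) for c in node.children)
    return min(minimax(c, depth - 1, True) for c in node.children)
```

For a root with two min children holding leaves (3, 5) and (2, 9), the max player gets \(\max(\min(3, 5), \min(2, 9)) = 3\).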

Minimax: 486 Predict the Winner

We know that Leetcode 486 Predict the Winner is a zero-sum, turn-based game, so in theory we can write a minimax algorithm for it. The difficulty lies in how to define the value, or utility. In the previous section we defined maxDiff(l, r) as the maximum difference for the current player facing the subarray \([l, r]\). In the base case, where only one element x is left, it is natural to define the value as +x for the max player and -x for the min player. Carrying this through the minimax algorithm, the total reward collected by the max player is \(+a_1 + a_2 + ... = A\) and the total collected by the min player is \(-b_1 - b_2 - ... = -B\); the max player aims for \(\max(A-B)\) while the min player aims for \(\min(A-B)\). With that in mind, the code is not hard to implement.

1 | # AC |
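One possible minimax formulation for 486, as a sketch: the max player adds the number he takes to the value and the min player subtracts it, so the root value equals \(A - B\). Function names are assumptions, and a tie counts as a win for player 1 as in the LeetCode statement.

```python
from typing import List


def predict_the_winner_minimax(nums: List[int]) -> bool:
    def minimax(l: int, r: int, is_max: bool) -> int:
        """Value A - B of the subgame on nums[l..r] under optimal play."""
        if l == r:
            return nums[l] if is_max else -nums[l]
        if is_max:
            # Max player gains the number he takes.
            return max(nums[l] + minimax(l + 1, r, False),
                       nums[r] + minimax(l, r - 1, False))
        # Min player's take counts negatively toward A - B.
        return min(-nums[l] + minimax(l + 1, r, True),
                   -nums[r] + minimax(l, r - 1, True))

    return minimax(0, len(nums) - 1, True) >= 0
```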

Minimax: 464 Can I Win

For this problem, as is common for win-lose-tie games without intrinsic intermediate values, it is typical to define the value as +1 if the max player wins, -1 if the min player wins, and 0 for a tie. Note the shortcut available to both players. For example, the max player can report Win (value = 1) as soon as he finds, while enumerating his possible moves, one that satisfies the winning condition (>= desiredTotal). This makes sense because once he gets 1 while maximizing, no further probing can return a larger value. The same optimization is generalized in the next, improved algorithm: alpha-beta pruning.

1 | # AC |
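A sketch of minimax for 464 with values +1 and -1 and the shortcut described above. The names are assumptions; memoization via `lru_cache` is added here to keep the search tractable, and the two early checks assume the same conventions as the DP version.

```python
from functools import lru_cache


def can_i_win_minimax(max_choosable_integer: int, desired_total: int) -> bool:
    m = max_choosable_integer
    if desired_total <= 0:
        return True
    if m * (m + 1) // 2 < desired_total:
        return False  # nobody can reach the total

    @lru_cache(maxsize=None)
    def minimax(used: int, remaining: int, is_max: bool) -> int:
        best = -1 if is_max else 1  # worst outcome for the player to move
        target = 1 if is_max else -1  # best outcome for the player to move
        for i in range(1, m + 1):
            bit = 1 << (i - 1)
            if used & bit:
                continue
            if i >= remaining:
                return target  # shortcut: this move wins on the spot
            value = minimax(used | bit, remaining - i, not is_max)
            best = max(best, value) if is_max else min(best, value)
            if best == target:
                return target  # shortcut: nothing better can be found
        return best

    return minimax(0, desired_total, True) == 1
```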

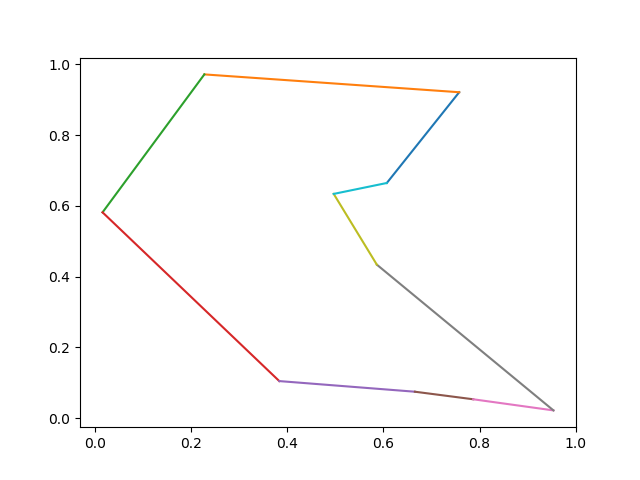

Alpha-Beta Pruning

We sensed that there is room for optimization during the search, as illustrated by the minimax solution to 464 Can I Win. Let's formalize this idea, called alpha-beta pruning. For each node we maintain two values, alpha and beta, which represent the minimum score that the maximizing player is assured of and the maximum score that the minimizing player is assured of, respectively. The root node starts with alpha = −∞ and beta = +∞, forming the valid interval [−∞, +∞]. During top-down traversal, a child node inherits the interval [alpha, beta] from its parent. If the updated alpha and beta in a node no longer form a valid interval (alpha >= beta), the remaining branches of that node can be pruned and the node returns immediately. Take the following example from Wikimedia.

Root node, initially: alpha = −∞, beta = +∞

Root node, after 4 is returned: alpha = 4, beta = +∞

Root node, after 5 is returned: alpha = 5, beta = +∞

Rightmost Min node, initially: alpha = 5, beta = +∞

Rightmost Min node, after 1 is returned: alpha = 5, beta = 1

Here [5, 1] is no longer a valid interval, so the node returns without probing its 2nd and 3rd children. Why? If another child returns a value > 1, say 2, the min node ignores it, since it already has a guaranteed value of 1. If another child returns a value < 1, the min node's value drops further, but it will be discarded by the root, a max node that is already guaranteed a value >= 5. So in this situation, whatever the other children return has no effect on the result.

Pseudo code in Python 3 is listed below.

1 | def alpha_beta(node: Node, depth: int, α: int, β: int, maximizingPlayer: bool) -> int: |
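A runnable sketch of generic alpha-beta pruning following the signature above. The `Node` class is a hypothetical minimal tree node, and the bounds are typed as `float` so that \(\pm\infty\) can be passed in.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    # Hypothetical node type: `value` is only meaningful where the search stops.
    value: int = 0
    children: List["Node"] = field(default_factory=list)


def alpha_beta(node: Node, depth: int, α: float, β: float,
               maximizingPlayer: bool) -> float:
    if depth == 0 or not node.children:
        return node.value
    if maximizingPlayer:
        value = -math.inf
        for child in node.children:
            value = max(value, alpha_beta(child, depth - 1, α, β, False))
            α = max(α, value)
            if α >= β:
                break  # interval is no longer valid: prune remaining children
        return value
    value = math.inf
    for child in node.children:
        value = min(value, alpha_beta(child, depth - 1, α, β, True))
        β = min(β, value)
        if α >= β:
            break  # interval is no longer valid: prune remaining children
    return value
```

With three min children whose leaves start with 4, 5, and 1, the third child is cut off right after its first leaf, mirroring the [5, 1] situation in the walkthrough.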

Alpha-Beta Pruning: 486 Predict the Winner

1 | # AC |
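A sketch of alpha-beta pruning applied to 486. To keep alpha and beta comparable across levels, this version carries the running difference `cur` (max player's score minus min player's score) down the tree, so every returned value is a final \(A - B\). This design and all names are assumptions; no memoization is used, because `cur` is part of the state.

```python
import math
from typing import List


def predict_the_winner_alpha_beta(nums: List[int]) -> bool:
    def search(l: int, r: int, cur: int, is_max: bool,
               alpha: float, beta: float) -> float:
        if l == r:
            return cur + (nums[l] if is_max else -nums[l])
        # Two moves: take the left end or take the right end.
        moves = ((l + 1, r, nums[l]), (l, r - 1, nums[r]))
        if is_max:
            value = -math.inf
            for nl, nr, gain in moves:
                value = max(value, search(nl, nr, cur + gain, False, alpha, beta))
                alpha = max(alpha, value)
                if alpha >= beta:
                    break  # min player above will never allow this branch
            return value
        value = math.inf
        for nl, nr, gain in moves:
            value = min(value, search(nl, nr, cur - gain, True, alpha, beta))
            beta = min(beta, value)
            if alpha >= beta:
                break  # max player above already has something better
        return value

    return search(0, len(nums) - 1, 0, True, -math.inf, math.inf) >= 0
```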

Alpha-Beta Pruning: 464 Can I Win

1 | # AC |
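A sketch of alpha-beta pruning for 464, assuming values of +1 for a max-player win and -1 for a min-player win, so the initial window can be [-1, 1]. Trying larger integers first is a simple move-ordering choice made here, not something taken from the original listing. The search is not memoized, so it is meant as an illustration for small inputs.

```python
def can_i_win_alpha_beta(max_choosable_integer: int, desired_total: int) -> bool:
    m = max_choosable_integer
    if desired_total <= 0:
        return True
    if m * (m + 1) // 2 < desired_total:
        return False  # nobody can reach the total

    def search(used: int, remaining: int, is_max: bool,
               alpha: int, beta: int) -> int:
        value = -1 if is_max else 1  # worst outcome for the player to move
        for i in range(m, 0, -1):  # larger numbers first (move ordering)
            bit = 1 << (i - 1)
            if used & bit:
                continue
            if i >= remaining:
                child = 1 if is_max else -1  # this move wins immediately
            else:
                child = search(used | bit, remaining - i, not is_max, alpha, beta)
            if is_max:
                value = max(value, child)
                alpha = max(alpha, value)
            else:
                value = min(value, child)
                beta = min(beta, value)
            if alpha >= beta:
                break  # window closed: remaining moves cannot matter
        return value

    return search(0, desired_total, True, -1, 1) == 1
```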

C++, Java, Javascript for 486 Predict the Winner

As a bonus, we AC Leetcode 486 in C++, Java, and Javascript with a bottom-up iterative DP. We show this method for the other languages not only because lru_cache is unavailable outside Python, but also because there are other ways to solve the problem. Notice the topological ordering of the DP dependencies: larger subproblems are built from smaller, already solved ones. It is also worth mentioning that this approach always executes on the order of \(n^2\) loop iterations, whereas the top-down caching approach may visit fewer than \(n^2\) states.
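For comparison, the same bottom-up idea can be sketched in Python. This is an illustrative version with assumed names, not a translation of the listings that follow; `dp[l][r]` stores maxDiff(l, r), and subarrays are filled in order of increasing length so that every dependency is already solved.

```python
from typing import List


def predict_the_winner_dp(nums: List[int]) -> bool:
    n = len(nums)
    dp = [[0] * n for _ in range(n)]
    for i in range(n):
        dp[i][i] = nums[i]  # a single number: the current player takes it
    for length in range(2, n + 1):
        for l in range(n - length + 1):
            r = l + length - 1
            # Depends only on the shorter subarrays [l+1, r] and [l, r-1].
            dp[l][r] = max(nums[l] - dp[l + 1][r],
                           nums[r] - dp[l][r - 1])
    return dp[0][n - 1] >= 0
```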

Java AC Code

1 | // AC |

C++ AC Code

1 | // AC |

Javascript AC Code

1 | /** |